The Kubernetes scheduler ensures that every pod gets matched to a suitable node for the kubelet to run it. The mechanism often delivers excellent results, boosting availability and performance. From a cost perspective, however, the default behavior is an anti-pattern. Running pods on partially occupied nodes can increase cloud expenses, and the problem worsens with workloads that require GPUs.

Because they can process large data sets in parallel, GPU instances are now popular for training and running inference on generative AI and large language models.

They execute tasks faster but can produce huge bills when combined with poor scheduling. Getting enough of them can also be tricky because of ongoing GPU shortages.

One of CAST AI’s users, a company developing an AI-driven security intelligence product, faced this challenge. By taking advantage of our platform’s node templates and autoscaler, their team was able to successfully provision and improve the performance of workloads requiring GPU-enabled instances.

Read on to discover how node templates can improve the way the Kubernetes scheduler handles your GPU-heavy workloads.

The difficulty of scheduling GPUs in Kubernetes

Kubernetes’ default scheduler, kube-scheduler, runs as part of the control plane.

It assigns nodes to newly created pods that have not been scheduled yet. By default, it tries to spread these pods evenly across nodes.

The scheduler first filters out nodes that cannot run your pod and scores the remaining feasible nodes. It then picks the node with the highest score and notifies the API server of its decision, a step known as binding. Along the way, it weighs factors such as resource requirements, hardware and software constraints, and affinity specifications.
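The filter-and-score process can be sketched in a few lines of Python. This is a simplified model, not kube-scheduler's code. The `Node` and `Pod` classes are hypothetical, and only CPU and memory requests are checked. The score imitates the `LeastAllocated` strategy, which is the default for the scheduler's `NodeResourcesFit` plugin and favors nodes that keep the most capacity free after placement. The hourly prices are invented and play no part in the decision.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Node:
    name: str
    cpu_free: float       # allocatable CPU cores not yet requested by other pods
    mem_free: float       # allocatable memory (GiB) not yet requested
    cpu_total: float
    mem_total: float
    hourly_price: float   # invented for illustration; the scoring never reads it


@dataclass
class Pod:
    name: str
    cpu: float
    mem: float


def feasible(node: Node, pod: Pod) -> bool:
    """Filter step: drop nodes that cannot fit the pod's requests."""
    return node.cpu_free >= pod.cpu and node.mem_free >= pod.mem


def least_allocated_score(node: Node, pod: Pod) -> float:
    """Score step (0-100): higher when more capacity stays free after placement."""
    cpu_left = (node.cpu_free - pod.cpu) / node.cpu_total
    mem_left = (node.mem_free - pod.mem) / node.mem_total
    return 100 * (cpu_left + mem_left) / 2


def schedule(pod: Pod, nodes: list[Node]) -> Optional[str]:
    """Return the chosen node's name, or None if the pod would stay Pending."""
    candidates = [n for n in nodes if feasible(n, pod)]
    if not candidates:
        return None
    best = max(candidates, key=lambda n: least_allocated_score(n, pod))
    return best.name  # kube-scheduler would now bind the pod through the API server


nodes = [
    Node("busy-cpu", cpu_free=2, mem_free=4, cpu_total=8, mem_total=32, hourly_price=0.4),
    Node("idle-gpu", cpu_free=90, mem_free=1100, cpu_total=96, mem_total=1152, hourly_price=30.0),
]
print(schedule(Pod("web", cpu=1, mem=2), nodes))  # idle-gpu
```

The small web pod lands on the nearly empty GPU node because that node has the most free capacity. In this made-up example, that node also costs far more per hour.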

Because the process is fully automated, it produces placement decisions quickly.

Features like inter-pod affinity/anti-affinity, node selectors, and node affinity give you some control over the scheduler’s decisions. However, they remain generic and can still lead to pricey placements.
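To make those controls concrete, here is a small matcher for `nodeSelector` and required node affinity. It follows the documented semantics: every `nodeSelector` pair must match, `nodeSelectorTerms` are ORed, `matchExpressions` inside a term are ANDed, and an empty term matches nothing. It supports only the `In`, `NotIn`, `Exists`, and `DoesNotExist` operators. Plain dictionaries stand in for real pod specs, and the `example.com/gpu-vendor` label is hypothetical.

```python
def matches_node_selector(selector: dict[str, str], labels: dict[str, str]) -> bool:
    """nodeSelector: every key/value pair must be present on the node."""
    return all(labels.get(k) == v for k, v in selector.items())


def matches_expression(expr: dict, labels: dict[str, str]) -> bool:
    key, op = expr["key"], expr["operator"]
    values = expr.get("values", [])
    if op == "In":
        return key in labels and labels[key] in values
    if op == "NotIn":
        return key not in labels or labels[key] not in values
    if op == "Exists":
        return key in labels
    if op == "DoesNotExist":
        return key not in labels
    raise ValueError(f"operator not supported in this sketch: {op}")


def term_matches(term: dict, labels: dict[str, str]) -> bool:
    exprs = term.get("matchExpressions", [])
    if not exprs:
        return False  # an empty nodeSelectorTerm matches no nodes
    return all(matches_expression(e, labels) for e in exprs)


def matches_required_affinity(terms: list[dict], labels: dict[str, str]) -> bool:
    """nodeSelectorTerms are ORed; matchExpressions within one term are ANDed."""
    return any(term_matches(t, labels) for t in terms)


gpu_node = {"node.kubernetes.io/instance-type": "g5.xlarge", "example.com/gpu-vendor": "nvidia"}
cpu_node = {"node.kubernetes.io/instance-type": "c6a.large"}

affinity = [{"matchExpressions": [
    {"key": "example.com/gpu-vendor", "operator": "In", "values": ["nvidia"]},
    {"key": "node.kubernetes.io/instance-type", "operator": "NotIn", "values": ["p4d.24xlarge"]},
]}]

print(matches_required_affinity(affinity, gpu_node))  # True
print(matches_required_affinity(affinity, cpu_node))  # False
```

Both rules narrow where a pod may land. Neither says anything about which of the eligible nodes is cheapest.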

This is because the Kubernetes scheduler doesn’t account for costs.

Keeping expenses in check always falls to engineers. The issue is especially acute for applications that run GPU jobs, because GPU instance rates are steep.

Costly decisions of the Kubernetes scheduler

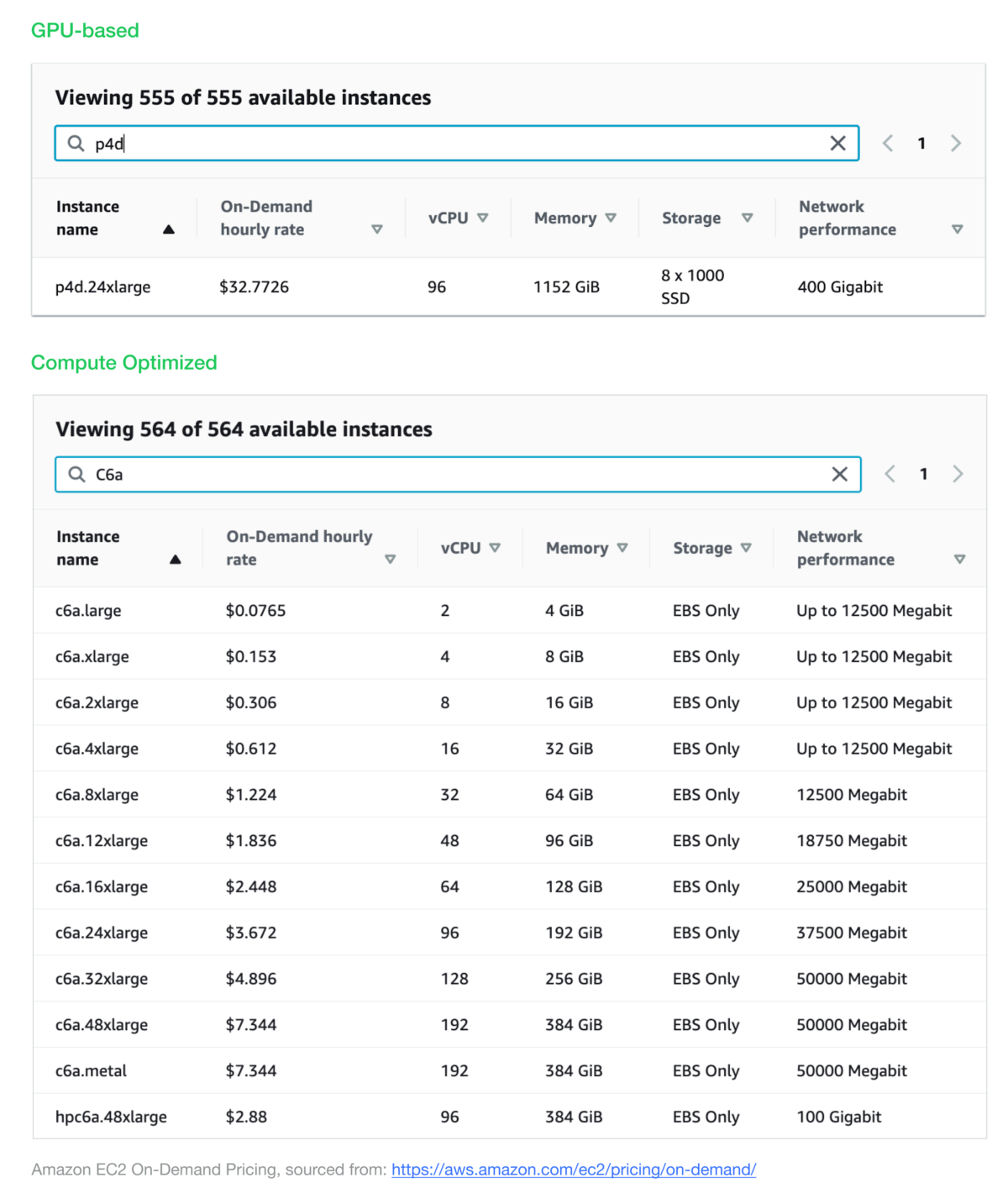

To better understand the impact of costly scheduling decisions, let’s look at Amazon EC2 P4d.

EC2 P4d was designed specifically for machine learning and high-performance computing applications in the cloud. It pairs NVIDIA A100 Tensor Core GPUs, which deliver high throughput, with low-latency, 400 Gbps instance networking.

According to AWS, P4d can cut ML model training costs by up to 60% and deliver up to 2.5x better deep learning performance than the previous-generation P3 instances.

Despite its impressive features, the hourly on-demand price far exceeds that of popular instance types like C6a.
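A back-of-the-envelope calculation shows why this matters. Idle capacity on a node is billed at that node's full rate, so the same 25% utilization wastes far more money on a GPU instance. The rates below are placeholders, not current AWS prices. The 730-hour month is the usual approximation for monthly cloud pricing.

```python
HOURS_PER_MONTH = 730  # common approximation for monthly cloud pricing


def monthly_cost(hourly_rate: float, utilization: float) -> tuple[float, float]:
    """Return (total monthly cost, the part of it spent on idle capacity)."""
    total = hourly_rate * HOURS_PER_MONTH
    idle = total * (1 - utilization)
    return total, idle


# Hypothetical on-demand rates, NOT current AWS prices.
for label, rate in [("large GPU node", 30.0), ("small CPU node", 0.10)]:
    total, idle = monthly_cost(rate, utilization=0.25)
    print(f"{label}: ${total:,.2f}/month, ${idle:,.2f} of it for idle capacity")
```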

Controlling the Kubernetes scheduler’s decisions with more precision is therefore crucial.

Unfortunately, managed services like GKE, AKS, and EKS give you limited ways to change the scheduler’s settings. The main option is mutating admission webhooks.

Authoring and installing webhooks calls for caution, because they may not be an entirely reliable solution.
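For reference, a mutating webhook that steers pods returns an `AdmissionReview` whose response carries a base64-encoded JSON Patch. The sketch below builds only that response body, using the standard library. It assumes the `admission.k8s.io/v1` format and a hypothetical `example.com/pool` node label. It deliberately leaves out the parts that make webhooks risky to run: TLS serving, the `MutatingWebhookConfiguration`, timeouts, and the `failurePolicy`.

```python
import base64
import json


def mutate(review: dict, selector: dict[str, str]) -> dict:
    """Build an admission.k8s.io/v1 AdmissionReview response that adds a nodeSelector."""
    request = review["request"]
    existing = request["object"].get("spec", {}).get("nodeSelector")

    if existing is None:
        # No nodeSelector yet: add the whole map in one operation.
        patch = [{"op": "add", "path": "/spec/nodeSelector", "value": selector}]
    else:
        # Add (or overwrite) key by key; "~" and "/" are escaped per RFC 6901.
        patch = [
            {"op": "add",
             "path": "/spec/nodeSelector/" + k.replace("~", "~0").replace("/", "~1"),
             "value": v}
            for k, v in selector.items()
        ]

    return {
        "apiVersion": "admission.k8s.io/v1",
        "kind": "AdmissionReview",
        "response": {
            "uid": request["uid"],  # must echo the request's uid
            "allowed": True,
            "patchType": "JSONPatch",
            "patch": base64.b64encode(json.dumps(patch).encode()).decode(),
        },
    }


review = {"request": {"uid": "1234", "object": {"spec": {"containers": []}}}}
resp = mutate(review, {"example.com/pool": "gpu"})
print(json.loads(base64.b64decode(resp["response"]["patch"])))
# [{'op': 'add', 'path': '/spec/nodeSelector', 'value': {'example.com/pool': 'gpu'}}]
```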

Taming the Kubernetes scheduler

One of CAST AI’s users faced precisely this challenge. The company has created an AI-powered intelligence solution that detects threats from social and news media in real time.

The product’s engine detects emerging narratives by analyzing millions of documents simultaneously. It can also automatically create unique NLP models for intelligence and defense.

The volume of classified and public data the product uses has been growing steadily. As a result, workloads often require GPU-enabled instances, which add cost and operational work.

Node pools, sometimes also called Auto Scaling groups, can save a lot of effort. But while they can streamline provisioning, they can also lead to paying for capacity that’s not needed.

CAST AI’s autoscaler and node templates help you control and reduce costs. With the on-demand fallback feature, you also get spot instance savings while keeping capacity available when spot instances are not.

How node templates help

The CAST AI client now runs its workloads on predefined groups of instances. Instead of picking specific instances manually, the team can define requirements broadly, such as “Memory-optimized” and “GPU VMs,” and the autoscaler follows suit.

Thanks to this feature, the team can now utilize different instances more freely.

Whenever AWS introduces new high-performance instance families, CAST AI enrolls you in them automatically, so you don’t need to enable them manually.

This is unlike node pools, which require you to keep up with new instance types and adjust your configurations accordingly.

When creating a node template, our client specified general requirements like instance types, the lifecycle of the new nodes, and provisioning configs.

The team also added constraints such as the instance families they wished to avoid (p4d, p3d, p2) and the preferred GPU manufacturer (in this case, NVIDIA).

CAST AI followed these requirements and picked matching instances. The autoscaler adheres to these constraints when adding new nodes and removes expensive resources right after the GPU jobs are done.
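The template logic described above boils down to filtering an instance catalog against a set of constraints. The sketch below is not CAST AI’s implementation or API. The `Instance` and `Template` types are hypothetical, and the prices are made up. The constraints mirror the client’s requirements: GPU instances only, NVIDIA GPUs, and the p4d, p3d, and p2 families excluded.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Instance:
    name: str                  # e.g. "g5.xlarge"; the family is the part before the dot
    gpu_vendor: Optional[str]  # None for CPU-only instances
    hourly_price: float        # illustrative numbers only

    @property
    def family(self) -> str:
        return self.name.split(".")[0]


@dataclass
class Template:
    gpu_required: bool = False
    gpu_vendor: Optional[str] = None
    excluded_families: set[str] = field(default_factory=set)


def matching(template: Template, catalog: list[Instance]) -> list[Instance]:
    """Return instances that satisfy every template constraint, cheapest first."""
    result = []
    for inst in catalog:
        if template.gpu_required and inst.gpu_vendor is None:
            continue
        if template.gpu_vendor and inst.gpu_vendor != template.gpu_vendor:
            continue
        if inst.family in template.excluded_families:
            continue
        result.append(inst)
    return sorted(result, key=lambda i: i.hourly_price)


catalog = [
    Instance("g5.xlarge", "NVIDIA", 1.0),
    Instance("g4dn.xlarge", "NVIDIA", 0.5),
    Instance("g4ad.xlarge", "AMD", 0.4),
    Instance("p4d.24xlarge", "NVIDIA", 30.0),
    Instance("p2.xlarge", "NVIDIA", 0.9),
    Instance("c6a.large", None, 0.1),
]
tpl = Template(gpu_required=True, gpu_vendor="NVIDIA", excluded_families={"p4d", "p3d", "p2"})
print([i.name for i in matching(tpl, catalog)])  # ['g4dn.xlarge', 'g5.xlarge']
```

The template describes requirements rather than a fixed list of instances. As a result, a new family that meets those requirements would appear in the result without any configuration change.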

Squeezing more out of GPUs with spot instances

Node templates come with one more benefit. Combined with spot instance automation, they let our client save up to 90% on hefty GPU VM costs without suffering the negative consequences of spot interruptions.

Spot prices for GPUs can vary drastically, so it’s essential to pick the best-value instances at any given moment.

CAST AI’s spot instance automation takes this task off your plate while striking the right balance between instance-type diversity and price.
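One simple way to balance price against diversity is to take the cheapest spot offers while capping how many come from any single instance family. That way, one capacity crunch cannot wipe out the whole pool. This is a hypothetical heuristic for illustration, not CAST AI’s algorithm, and the spot prices are made up.

```python
from collections import Counter


def pick_spot_types(offers: dict[str, float], count: int, max_per_family: int = 1) -> list[str]:
    """Choose up to `count` instance types, cheapest first, at most `max_per_family` per family."""
    chosen = []
    per_family = Counter()
    for name in sorted(offers, key=offers.get):  # cheapest offer first
        family = name.split(".")[0]
        if per_family[family] >= max_per_family:
            continue  # this family is already represented enough
        chosen.append(name)
        per_family[family] += 1
        if len(chosen) == count:
            break
    return chosen


spot = {"g4dn.xlarge": 0.16, "g4dn.2xlarge": 0.23, "g5.xlarge": 0.30, "g5.2xlarge": 0.36, "g6.xlarge": 0.25}
print(pick_spot_types(spot, count=3))  # ['g4dn.xlarge', 'g6.xlarge', 'g5.xlarge']
```

Raising `max_per_family` favors price over diversity. Lowering it spreads the risk of interruption across more families.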

The on-demand fallback can be advantageous during mass spot interruptions or low spot availability.

In deep learning workflows, training processes that are interrupted or saved improperly can cause significant data loss. If AWS reclaims all the G3 or P4d spot instances your workloads are using, an automated fallback can prevent a lot of trouble.
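The fallback behavior can be sketched as follows: try spot capacity first, and switch to on-demand only when no spot offer can be fulfilled. The `request_capacity` callback is a hypothetical stand-in for a cloud provider call, not a real AWS or CAST AI API. The sketch also ignores retries and moving workloads back to spot later.

```python
from typing import Callable, Optional

# Hypothetical provider hook: (instance_type, lifecycle) -> node ID, or None if unavailable.
RequestFn = Callable[[str, str], Optional[str]]


def provision(types: list[str], request_capacity: RequestFn) -> tuple[str, str]:
    """Return (node_id, lifecycle), preferring spot and falling back to on-demand."""
    for lifecycle in ("spot", "on-demand"):
        for instance_type in types:
            node_id = request_capacity(instance_type, lifecycle)
            if node_id is not None:
                return node_id, lifecycle
    raise RuntimeError("no spot or on-demand capacity for any candidate type")


def no_spot_available(instance_type: str, lifecycle: str) -> Optional[str]:
    # Simulate a mass spot interruption: only on-demand requests succeed.
    return None if lifecycle == "spot" else f"{instance_type}-{lifecycle}-1"


print(provision(["g5.xlarge", "g4dn.xlarge"], no_spot_available))
# ('g5.xlarge-on-demand-1', 'on-demand')
```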

Steps to create a node template

You can create a node template in three different ways, and it’s a relatively quick process.

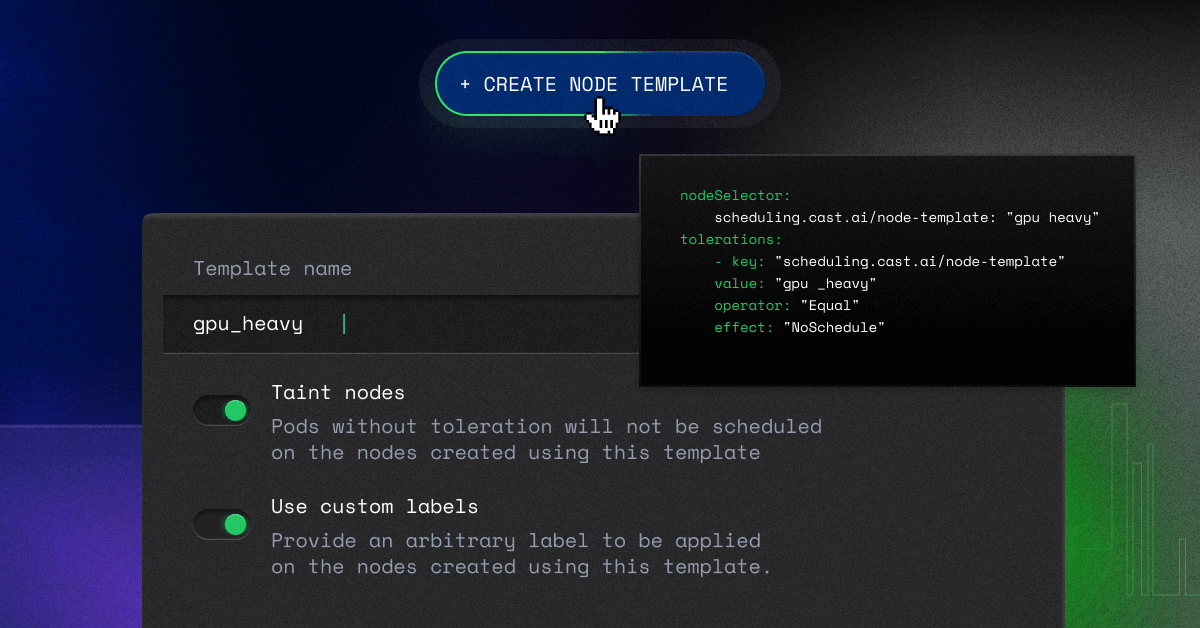

If you have already connected and onboarded a cluster, using CAST AI’s UI is a breeze.

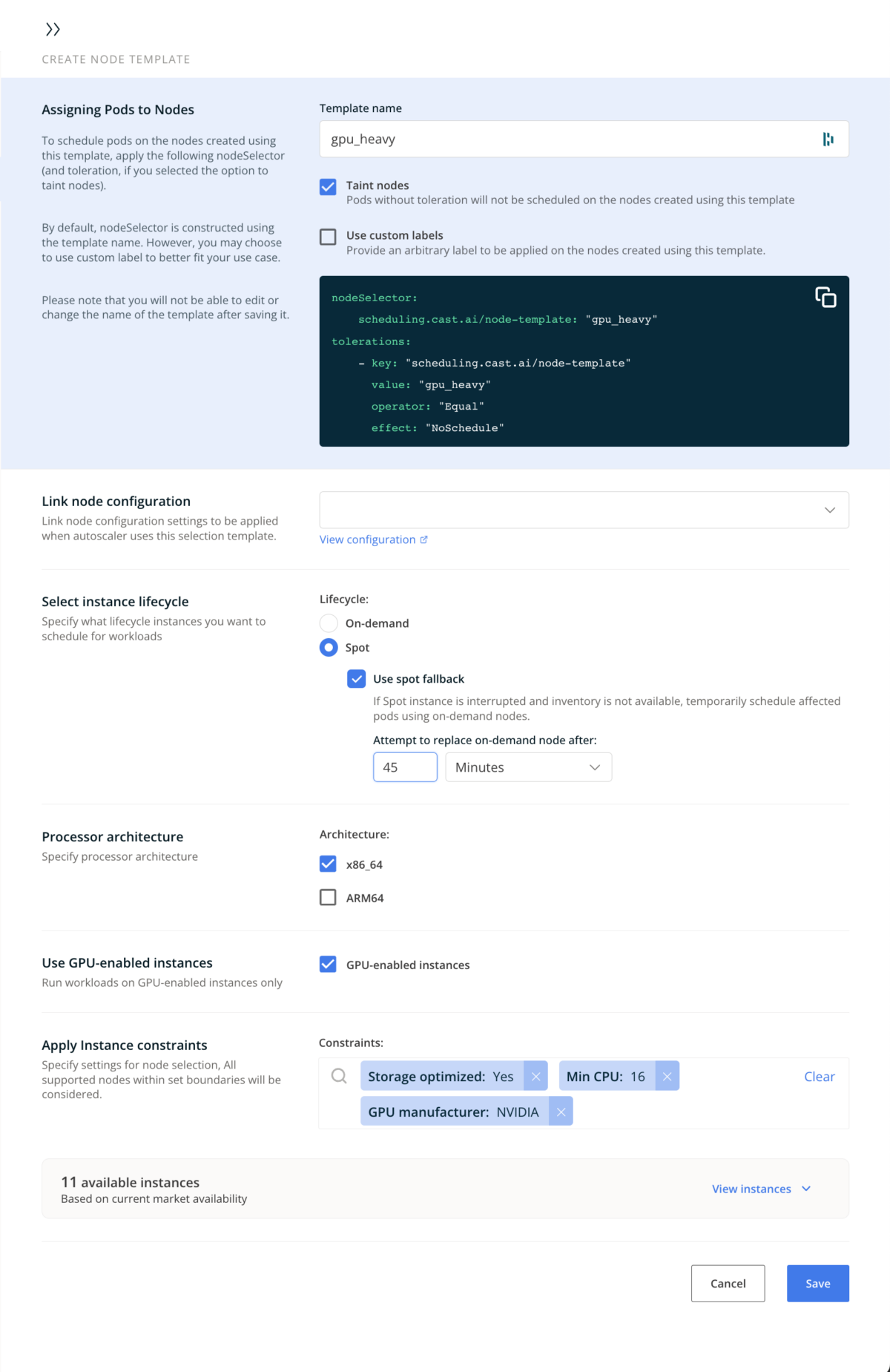

Access your CAST AI account and follow the on-screen instructions in the Autoscaler feature. This is what you will see:

After naming the template, choose whether to taint the new nodes so that pods are not assigned to them unless they tolerate the taint. You can also use the template to specify a custom label for the nodes it creates.
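Tainting works together with tolerations on the pod side: a pod can land on a tainted node only if it tolerates the taint. The check below follows the documented matching rules. Key and effect must match, `Equal` also compares the value, and `Exists` ignores it. An empty key with `Exists` tolerates every taint, and an empty effect matches all effects. `PreferNoSchedule` taints are only a soft preference, so they are skipped. The `nvidia.com/gpu` taint key is a common convention and is used here only for illustration.

```python
def tolerates(toleration: dict, taint: dict) -> bool:
    """Return True if a single pod toleration matches a node taint."""
    effect = toleration.get("effect", "")
    if effect and effect != taint["effect"]:
        return False  # an empty effect would match every effect
    operator = toleration.get("operator", "Equal")
    key = toleration.get("key", "")
    if operator == "Exists":
        return key == "" or key == taint["key"]  # empty key + Exists tolerates all taints
    return key == taint["key"] and toleration.get("value", "") == taint.get("value", "")


def can_schedule(tolerations: list[dict], taints: list[dict]) -> bool:
    """Every NoSchedule/NoExecute taint must be tolerated by at least one toleration."""
    return all(
        any(tolerates(t, taint) for t in tolerations)
        for taint in taints
        if taint["effect"] in ("NoSchedule", "NoExecute")
    )


gpu_taint = {"key": "nvidia.com/gpu", "value": "true", "effect": "NoSchedule"}
gpu_pod = [{"key": "nvidia.com/gpu", "operator": "Exists", "effect": "NoSchedule"}]
print(can_schedule(gpu_pod, [gpu_taint]))  # True
print(can_schedule([], [gpu_taint]))       # False
```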

You can then link your template to a relevant node configuration and decide whether to use it with spot or on-demand nodes only.

You also get a choice of processor architecture and the option to use GPU-enabled instances. If you select this preference, CAST AI will automatically run your workloads on relevant instances, including any new families released by your cloud provider.

Finally, you can also add restrictions such as:

- Compute-optimized, which helps select instances for apps needing high-performance CPUs.

- Storage-optimized, which picks instances for apps requiring high IOPS.

- Additional constraints, such as instance family and minimum and maximum CPU and memory limits.

In practice, though, the fewer constraints you set, the better the matches and cost savings you get, and CAST AI’s engine handles the rest.
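The reasoning behind fewer constraints is that each constraint can only remove candidates. The cheapest remaining option therefore stays the same or gets more expensive, never cheaper. The sketch shows this with minimum and maximum CPU and memory bounds. The catalog of shapes and prices is hypothetical.

```python
from typing import NamedTuple, Optional


class Shape(NamedTuple):
    name: str
    cpu: int            # vCPUs
    mem_gib: int
    hourly_price: float


def cheapest(catalog: list[Shape], min_cpu: int = 0, max_cpu: Optional[int] = None,
             min_mem: int = 0, max_mem: Optional[int] = None) -> Optional[Shape]:
    """Cheapest shape within the CPU and memory bounds (None means unbounded)."""
    fits = [
        s for s in catalog
        if s.cpu >= min_cpu and (max_cpu is None or s.cpu <= max_cpu)
        and s.mem_gib >= min_mem and (max_mem is None or s.mem_gib <= max_mem)
    ]
    return min(fits, key=lambda s: s.hourly_price, default=None)


catalog = [
    Shape("type-a", 4, 8, 0.15),
    Shape("type-b", 4, 16, 0.20),
    Shape("type-c", 8, 16, 0.30),
    Shape("type-d", 8, 32, 0.40),
]

loose = cheapest(catalog, min_cpu=4, min_mem=8)
strict = cheapest(catalog, min_cpu=4, min_mem=16, max_cpu=8)
print(loose.name, loose.hourly_price)    # type-a 0.15
print(strict.name, strict.hourly_price)  # type-b 0.2
```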

Apart from the UI, you can also create node templates with Terraform (refer to GitHub) or API (check the documentation).

Over to you

Kubernetes scheduling can pose a challenge, particularly for applications relying heavily on GPUs.

Although the Kubernetes scheduler automates the provisioning process and delivers fast results, it may be too expensive and general for your application’s specific needs.

Node templates give your GPU-intensive workloads better performance and flexibility. The feature also ensures that once a GPU job is completed, the autoscaler decommissions the expensive resource and provisions something more affordable for your application’s new requirements.

We’ve observed that this capability helps teams build AI applications faster and more reliably, and we hope you’ll find it beneficial too.

CAST AI clients save an average of 63% on their Kubernetes bills.

Book a technical call and see how to streamline scheduling your GPU workloads with node templates.
