Building an AI solution sounds like a cool challenge with massive potential for business impact. But ask any finance manager and they’ll instantly point out the risk. The margin for AI software is smaller than for typical SaaS because of the incredibly high compute requirements AI places on both its makers and users.

The enormous cost of training and running (inference) generative and large language models (LLMs) is a structural expense that makes this advancement different from previous technological leaps. This type of AI model requires a massive amount of computational resources since it performs billions of computations every time it responds to a prompt.

Such computations also demand specialized hardware. Traditional computer processors can run ML models, but they’re very slow. That’s why most training and some inference take place on GPU instances, which are well suited to running many computations in parallel.

Cloud cost management solutions can help lower the cost of training and inference on many fronts, especially if you use Kubernetes.

Keep reading to learn how.

Reducing model training and production costs

Many companies use Kubernetes to run their containerized applications, and that includes AI models. There’s just one caveat. Kubernetes scheduling ensures that pods are assigned to the correct nodes, but the process is all about availability and performance, with no consideration for cost-effectiveness.

Kubernetes pods operating on half-empty nodes come with a high price tag, especially in GPU-intensive applications. Improper scheduling can easily build a massive cloud bill.
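To get a feel for the numbers, here is a minimal sketch of what a half-empty GPU node costs. The function name, the node size, and the $32 hourly price are all hypothetical values picked for illustration, not real pricing.

```python
def wasted_spend(hourly_price: float, gpus_total: int,
                 gpus_requested: int, hours: float) -> float:
    """Cost of GPU capacity that is paid for but not requested by any pod."""
    if gpus_total <= 0:
        raise ValueError("gpus_total must be positive")
    idle_fraction = max(gpus_total - gpus_requested, 0) / gpus_total
    return hourly_price * hours * idle_fraction


# Hypothetical node: 8 GPUs at $32/hour, only 3 GPUs requested, for 720 hours.
print(wasted_spend(32.0, 8, 3, 720))  # 14400.0
```

In this made-up case, more than half of the monthly bill for the node pays for GPUs that no pod asked for.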

But there are a few things you can do to prevent your cloud bill from skyrocketing.

Autoscaling with node templates

To solve this problem for one of our customers, we developed a mechanism for provisioning and scaling cost-effective GPU nodes for training.

CAST AI’s autoscaler and node templates automate the provisioning process that engineers would otherwise have to carry out manually.

Workloads run on predefined groups of instances. Instead of choosing specific instances manually, engineers can define their characteristics broadly – for example, “GPU VMs,” and the autoscaler does the rest. Expanding the choice of machines is a smart move considering the current server shortages.
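The idea of describing machines broadly and letting automation pick one can be sketched in a few lines. This is not CAST AI’s API or algorithm. The `Instance` class, the catalog entries, and the prices are hypothetical and only illustrate the principle of filtering by constraints and choosing the cheapest match.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Instance:
    name: str
    gpus: int
    vcpus: int
    hourly_price: float


# Hypothetical catalog; names and prices are made up for illustration.
CATALOG = [
    Instance("gpu-small", 1, 8, 1.20),
    Instance("gpu-medium", 4, 32, 4.10),
    Instance("gpu-large", 8, 64, 9.00),
    Instance("cpu-general", 0, 16, 0.40),
]


def cheapest_match(catalog, min_gpus, min_vcpus=0):
    """Return the cheapest instance meeting the constraints, or None."""
    candidates = [i for i in catalog
                  if i.gpus >= min_gpus and i.vcpus >= min_vcpus]
    return min(candidates, key=lambda i: i.hourly_price, default=None)


# "Any machine with at least 2 GPUs" instead of a hand-picked instance type.
print(cheapest_match(CATALOG, min_gpus=2).name)  # gpu-medium
```

The wider the constraints, the more candidates there are to choose from, which matters when a specific instance type is out of stock.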

Once the GPU jobs are done, the CAST AI autoscaler decommissions the GPU instances and replaces them with more cost-efficient alternatives. All of this happens automatically, without anyone having to lift a finger.

You can learn more about this feature in the documentation.

Optimizing and autoscaling CPU and GPU spot instances for inference

Spot instances are the most cost-effective instance option in AWS, potentially helping you save up to 90% off on-demand pricing. But they might get interrupted at any time with only 2 minutes of warning. The warning times on Google Cloud and Azure are even shorter, at 30 seconds.

CAST AI has a mechanism in place to deal with that by automating spot instances at every stage of their lifecycle.

The platform identifies the optimal pod configuration for your model’s computation requirements and automatically selects virtual machines that meet workload criteria while choosing the cheapest instance families on the market.

If CAST AI can’t find any spot capacity to meet a workload’s demand, it uses the Spot Fallback feature to temporarily run the workload on on-demand instances until spot instances become available. Once that happens, it seamlessly moves workloads back to spot instances, provided that users request this behavior.
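The fallback behavior described above boils down to a small decision rule. The sketch below is an illustration under our own assumptions, not the platform’s implementation. The function name and the `rebalance_to_spot` flag are hypothetical stand-ins for the user’s opt-in.

```python
def choose_capacity(spot_available: bool, currently_on: str,
                    rebalance_to_spot: bool) -> str:
    """Return 'spot' or 'on-demand' for the next placement decision."""
    if not spot_available:
        # No spot capacity: fall back to on-demand so the workload keeps running.
        return "on-demand"
    if currently_on == "on-demand" and not rebalance_to_spot:
        # Spot is back, but the user did not ask to be moved.
        return "on-demand"
    return "spot"


print(choose_capacity(False, "spot", True))       # on-demand
print(choose_capacity(True, "on-demand", True))   # spot
print(choose_capacity(True, "on-demand", False))  # on-demand
```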

Pricing prediction for smarter workload execution planning

CAST AI features pricing algorithms that forecast seasonality and trends using cutting-edge ML models, allowing the platform to determine the ideal times to run batch jobs. If users don’t need workloads to run immediately, this results in considerable cost savings.

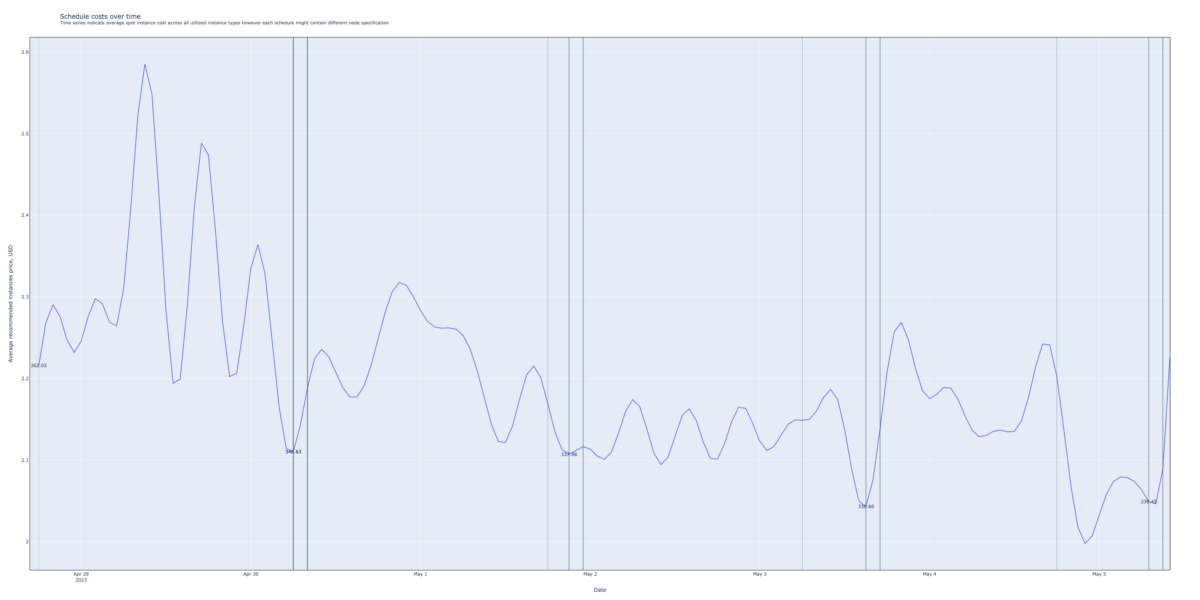

Example

This simplified chart shows the price of running a single workload on two different types of EC2 instances. The when-to-run model calculates a price and an optimal pod configuration given inputs such as CPU and RAM requests, the predicted future price, and others.

The X axis shows the date and the Y axis shows the total price of a single workload run. If you run the workload immediately, you’ll pay about $360 for the whole run. If you wait a couple of days, the same run will cost you 5% less.
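The when-to-run idea can be illustrated with a toy example. This is a simplified sketch, not the actual model. It assumes the price forecast already exists as a list of hourly prices, and the function name, the forecast values, and the deadline are hypothetical.

```python
def best_start(forecast, run_hours, deadline_hour):
    """Find the cheapest start hour for a job that must finish by the deadline.

    forecast: predicted price per hour, indexed by hours from now.
    Returns (start_hour, total_cost).
    """
    last_start = min(deadline_hour, len(forecast)) - run_hours
    if last_start < 0:
        raise ValueError("workload does not fit before the deadline")
    costs = {s: sum(forecast[s:s + run_hours]) for s in range(last_start + 1)}
    start = min(costs, key=costs.get)
    return start, costs[start]


# Made-up hourly prices for the next 7 hours; the job runs for 2 hours.
forecast = [10, 10, 9, 8, 8, 9, 12]
print(best_start(forecast, run_hours=2, deadline_hour=7))  # (3, 16)
```

Starting right away would cost 20 in this made-up forecast, while waiting three hours brings the cost down to 16.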

All of these built-in benefits enable teams to plan cloud budgets efficiently, resulting in a substantially higher spot instance fulfillment rate and improved savings.

CAST AI generated 76% cloud cost savings for a customer using EKS to train and run AI models

Support for AWS Inferentia

Inferentia is a great processor for inference if your team uses the AWS Neuron SDK and runtime. CAST AI can create node templates just for this type of instance. AWS recently released the latest generation, Inferentia2, which powers Inf2 instances and is purpose-built for deep learning use cases.

If you’re not familiar with Inferentia processors, here is a quick primer.

AWS Inferentia is a family of purpose-built ML inference processors built to deliver high performance and low cost inference for various applications. These processors are designed to be highly efficient at processing DNNs via a custom-built deep learning inference engine optimized for inference workloads. This engine supports many popular neural network architectures such as ResNet, VGG, and LSTM, among others.

You can get Inferentia processors in various configurations, and then use them with AWS services such as Amazon SageMaker and AWS Deep Learning AMIs to deploy and scale ML inference workloads.

Easier NVIDIA driver configuration

Additionally, CAST AI handles all of the housekeeping around the NVIDIA driver, from installation to configuration. This is key because any misconfiguration may result in workloads running on the CPU instead of the GPU. Automating it also frees up your engineers’ time for more impactful tasks.
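A quick way to catch a silent fallback to CPU is to check whether the driver can actually see a GPU from inside the container. Here is a minimal sketch that relies only on the `nvidia-smi` command-line tool shipped with the NVIDIA driver. The `gpu_visible` helper is a hypothetical name, and the check only tells you that a GPU is listed, not that your framework will use it.

```python
import shutil
import subprocess


def gpu_visible() -> bool:
    """Return True if nvidia-smi is installed and lists at least one GPU."""
    if shutil.which("nvidia-smi") is None:
        return False
    try:
        # "nvidia-smi -L" prints one line per GPU it can see.
        result = subprocess.run(["nvidia-smi", "-L"],
                                capture_output=True, text=True, timeout=10)
    except (OSError, subprocess.TimeoutExpired):
        return False
    return result.returncode == 0 and "GPU" in result.stdout


if not gpu_visible():
    print("No GPU visible: this workload would fall back to the CPU")
```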

Next steps: time-slicing and more features

GPU time slicing is one of the upcoming features we’re planning to add specifically for teams building and running large AI models.

GPU time slicing is a technique used to share a single physical GPU among multiple Kubernetes pods. Each pod is allocated a portion of the GPU’s processing time, which allows multiple applications to run concurrently on one physical GPU.

This is particularly useful in cloud environments, where resources are often shared among multiple users or applications. By using GPU time slicing, providers can offer GPU acceleration to a larger number of users while making sure that each user gets a fair share of the GPU’s processing power.

One of the key benefits of GPU time slicing is that it allows users to run GPU-accelerated applications without having to allocate their own dedicated GPU instances, slashing the cost of running GPU-accelerated workloads.
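To make the concept concrete, here is a toy round-robin simulation of how time slices might be handed out. In practice the GPU driver does the switching, so treat this purely as an illustration. The pod names and the `schedule` function are hypothetical.

```python
from collections import Counter
from itertools import cycle


def schedule(pods, slices):
    """Assign consecutive GPU time slices to pods in round-robin order."""
    if not pods:
        raise ValueError("at least one pod is required")
    order = cycle(pods)
    return [next(order) for _ in range(slices)]


timeline = schedule(["pod-a", "pod-b", "pod-c"], 6)
print(timeline)  # ['pod-a', 'pod-b', 'pod-c', 'pod-a', 'pod-b', 'pod-c']
print(Counter(timeline))  # each pod gets 2 of the 6 slices
```

Three pods share one GPU here, so each one gets a third of the GPU time instead of paying for a dedicated device.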

Cut your AI/ML cloud spend

CAST AI is a Kubernetes management platform that continuously analyzes and optimizes compute resources to support engineers at every step of the way.

After connecting your cluster, you’ll see suggested recommendations and can implement them automatically for immediate cost reduction in your AI project.

If you have unique GPU workload use cases or have had trouble scaling GPU-heavy workloads effectively, we would love to hear from you.

CAST AI clients save an average of 63% on their Kubernetes bills

Book a call to see if you too can get low & predictable cloud bills.
