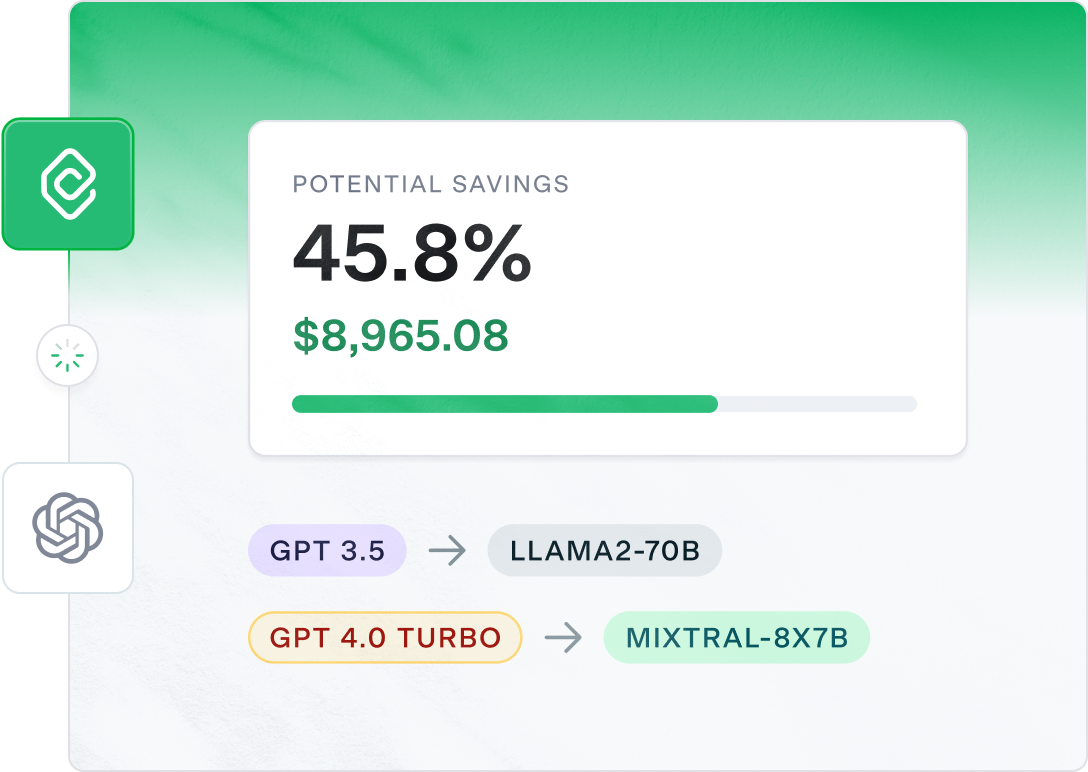

Reducing large language model costs

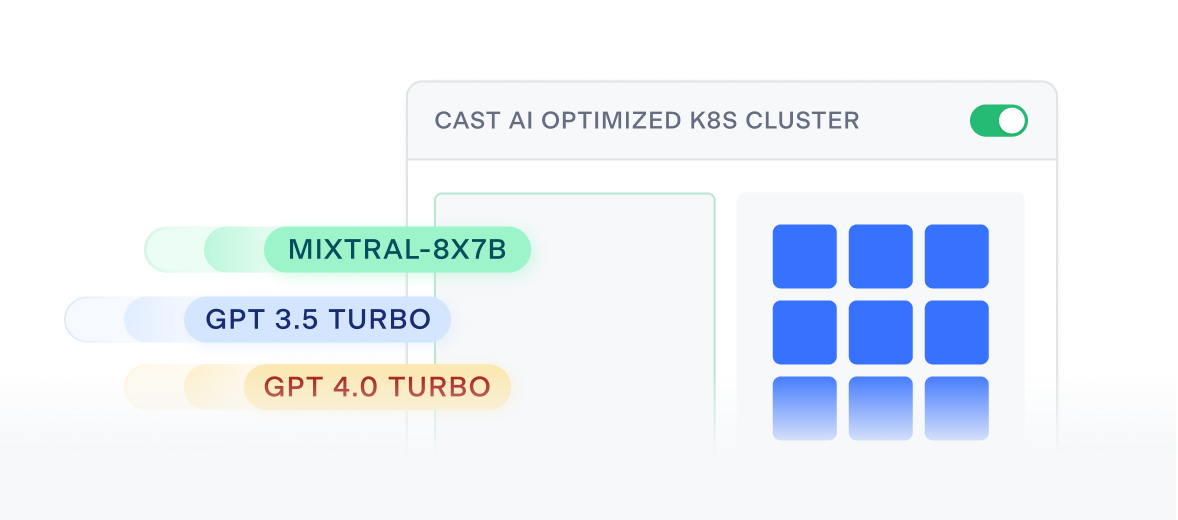

Our new AI Optimizer service automatically identifies the LLM that offers the best performance and lowest inference costs, and deploys it on CAST AI optimized Kubernetes clusters.


Key features

Dramatically reduce LLM costs

Integrates with any OpenAI-compatible API endpoint

The solution analyzes cost per user and per API key, along with overall usage patterns and other factors.
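To make the per-key cost analysis concrete, here is a minimal Python sketch of how usage records could be rolled up into a cost per API key. It is an illustration only, not AI Optimizer's implementation: the record fields, the model names, and the prices in `PRICE_PER_1K_TOKENS` are all hypothetical.

```python
from collections import defaultdict

# Hypothetical prices in USD per 1,000 tokens; not real vendor pricing.
PRICE_PER_1K_TOKENS = {"model-a": 0.03, "model-b": 0.002}


def cost_by_api_key(records):
    """Sum the estimated cost of each request, grouped by API key."""
    totals = defaultdict(float)
    for rec in records:
        price = PRICE_PER_1K_TOKENS[rec["model"]]
        totals[rec["api_key"]] += rec["tokens"] / 1000 * price
    return dict(totals)


# Hypothetical usage log: one entry per request.
usage = [
    {"api_key": "key-1", "model": "model-a", "tokens": 2000},
    {"api_key": "key-1", "model": "model-b", "tokens": 5000},
    {"api_key": "key-2", "model": "model-b", "tokens": 1000},
]

costs = cost_by_api_key(usage)
for key, usd in sorted(costs.items()):
    print(f"{key}: ${usd:.3f}")
```

Grouping by user instead of API key would only change the field used as the dictionary key.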

Keep your existing tech stack

The service doesn’t require swapping your technology stack or even changing a line of application code.

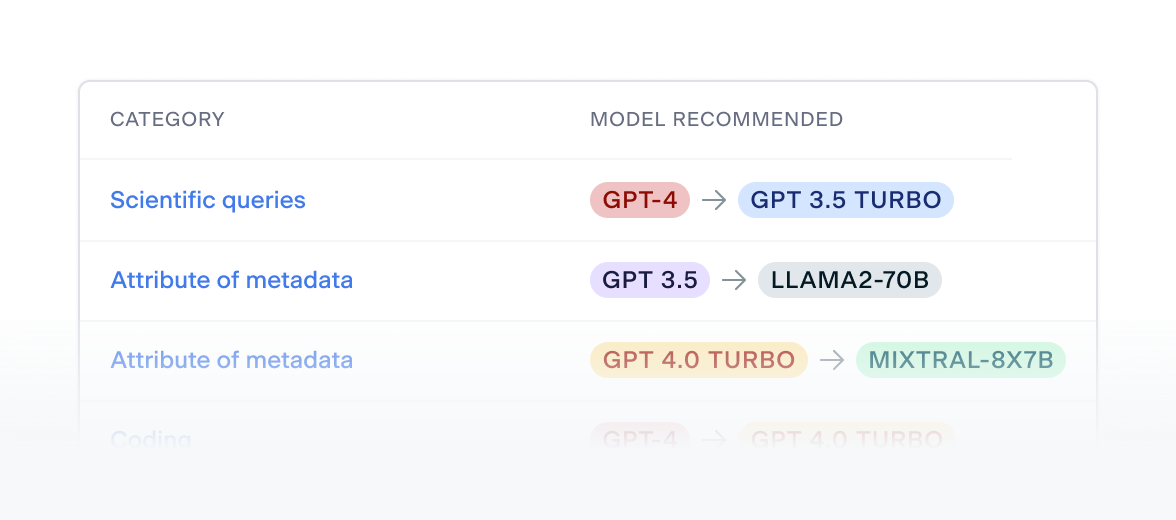

Identifies the LLM with optimal performance and lowest inference costs

Not all LLMs are created equal. AI Optimizer identifies those with the best balance of cost, performance, and accuracy.
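One simple way to think about this trade-off is to pick the cheapest model that still meets your accuracy and latency requirements. The Python sketch below shows that idea under stated assumptions: the `Candidate` type, the `pick_model` helper, and every number in it are hypothetical, and the selection logic AI Optimizer actually uses is not described here.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    name: str
    cost_per_1k_tokens: float  # USD, hypothetical
    accuracy: float            # score from 0 to 1 on your own evaluation set
    latency_ms: float          # typical response latency


def pick_model(candidates, min_accuracy, max_latency_ms):
    """Return the cheapest candidate meeting both constraints, or None."""
    eligible = [
        c for c in candidates
        if c.accuracy >= min_accuracy and c.latency_ms <= max_latency_ms
    ]
    return min(eligible, key=lambda c: c.cost_per_1k_tokens, default=None)


# Hypothetical candidates; none of these figures describe a real model.
candidates = [
    Candidate("large", 0.03, 0.92, 900),
    Candidate("medium", 0.004, 0.88, 400),
    Candidate("small", 0.001, 0.71, 150),
]

best = pick_model(candidates, min_accuracy=0.85, max_latency_ms=1000)
print(best.name if best else "no model meets the constraints")
```

With these sample values, the smallest model is the cheapest but fails the accuracy floor, so the mid-sized model is selected over the largest one.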

Deploys the LLM on CAST AI-optimized Kubernetes clusters

Unlock even more generative AI savings with cost optimization features such as autoscaling and bin packing.
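Bin packing here means fitting workloads onto as few nodes as possible so that idle capacity can be removed. The Python sketch below uses the classic first-fit decreasing heuristic on CPU requests to show the idea. It is a generic textbook technique, not CAST AI's scheduler, and the `first_fit_decreasing` helper and the sample numbers are hypothetical.

```python
def first_fit_decreasing(pod_cpu_requests, node_capacity):
    """Place each pod on the first node with room, largest pods first.

    Returns a list of nodes, each a list of the CPU requests placed on it.
    """
    nodes = []
    for request in sorted(pod_cpu_requests, reverse=True):
        if request > node_capacity:
            raise ValueError(f"request {request} exceeds node capacity")
        for node in nodes:
            if sum(node) + request <= node_capacity:
                node.append(request)
                break
        else:
            # No existing node has room, so add a new one.
            nodes.append([request])
    return nodes


# Hypothetical workload: CPU cores requested per pod, on 4-core nodes.
placement = first_fit_decreasing([2, 1, 3, 2, 1, 3], node_capacity=4)
print(f"{len(placement)} nodes used: {placement}")
```

Real schedulers also weigh memory, GPUs, affinity rules, and disruption budgets; the sketch covers a single resource only.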

Gain early access to new features

The ability to automatically identify the LLM that offers optimal performance at the lowest inference cost is publicly available today.