Generative AI is on everyone’s lips. But impressive as they are, GenAI and AI products in general can be costly to build and run, unless you find ways to reduce your cloud bill, for example, with spot VMs.

AI products don’t have the same economic structure as other SaaS businesses. Their profit margins are typically lower, while building and running them can be far more expensive due to the need for specialized software.

In a recent post, our CTO addressed this situation and outlined ways to cut the costs of running generative AI models and large language models. One of the methods he discussed was spot VMs, the most cost-effective instance option across all major cloud providers.

Spot VMs can bring savings of up to 90% compared to on-demand pricing, but the provider can also interrupt them at any time with only a very short warning. You can mitigate these risks with spot VM automation and use it to cut the cost of training AI models and increase your product’s profit margins.

Read on to learn how.

Why are spot VMs good for training AI models?

All major cloud providers sell their spare capacity at a much lower cost than regular on-demand virtual machines. Azure and Google Cloud call this type of capacity “spot VMs”, while AWS calls it “spot instances”, but they all refer to the same idea.

Spot VMs are an excellent choice for teams developing AI products for multiple reasons. In a nutshell, they offer a cost-effective way to access high-performance cloud resources. For example, compute-intensive workloads could run on Azure Fsv2-series spot VMs for around one-fifth of the regular price.

Spot VM pricing on Azure and GCP may fluctuate based on available capacity. Even so, it stays significantly below on-demand rates, which comes in handy if you train models on large datasets or run computationally intensive experiments.

With spot VMs, you can access a wide variety of resources, including those optimized for CPU and GPU workloads. This flexibility allows you to choose the right instance for the job, be it training models, running inference, or developing apps.

And since spot VMs are often available in large numbers, they can also improve your overall scalability. You can easily spin up a new spot VM and terminate it as soon as you no longer need it, reducing costs further.

What are the limitations of spot VMs?

Despite bringing multiple benefits to teams developing AI products, spot VMs aren’t bulletproof. Their most significant drawbacks include the following:

First of all, your cloud service provider can interrupt spot VMs at any time. Yes, you get a preemption warning, but it’s very short: 2 minutes on AWS and 30 seconds on Azure and Google Cloud.

This means that spot VMs may be unsuitable for certain workloads. For example, if a deep learning training process is interrupted before its progress has been saved, that progress is lost.
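A common safeguard is to checkpoint training progress regularly and once more when a shutdown signal arrives. The minimal Python sketch below assumes the process receives SIGTERM before the VM disappears (Kubernetes, for example, sends SIGTERM to a container when it terminates the pod); the checkpoint path, the JSON format, and the one-line stand-in for a training step are hypothetical placeholders for your framework’s own save and restore calls.

```python
import json
import os
import signal
import tempfile

# Hypothetical checkpoint location. In practice, use storage that outlives the VM.
CHECKPOINT_PATH = os.path.join(tempfile.gettempdir(), "train_checkpoint.json")

stop_requested = False


def handle_sigterm(signum, frame):
    # Only set a flag; the training loop saves state at a safe point.
    global stop_requested
    stop_requested = True


def save_checkpoint(path, step, state):
    # Write to a temporary file first, then atomically replace the old checkpoint,
    # so an interruption mid-write never destroys the last good checkpoint.
    tmp = path + ".tmp"
    with open(tmp, "w") as f:
        json.dump({"step": step, "state": state}, f)
    os.replace(tmp, path)


def load_checkpoint(path):
    # Start from scratch if no checkpoint exists yet.
    if not os.path.exists(path):
        return 0, {"loss": None}
    with open(path) as f:
        data = json.load(f)
    return data["step"], data["state"]


def train(total_steps, checkpoint_every=100, path=CHECKPOINT_PATH):
    signal.signal(signal.SIGTERM, handle_sigterm)
    step, state = load_checkpoint(path)  # resume where the previous VM stopped
    while step < total_steps and not stop_requested:
        state = {"loss": 1.0 / (step + 1)}  # stand-in for one real training step
        step += 1
        if step % checkpoint_every == 0:
            save_checkpoint(path, step, state)
    # Always save on the way out: training either finished or a stop was requested.
    save_checkpoint(path, step, state)
    return step
```

Keeping the signal handler down to setting a flag lets the loop finish its current step cleanly, and because every run starts with `load_checkpoint`, a replacement VM simply picks up from the last saved step.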

You need a way to assess your workload’s spot-friendliness before actually moving it to a spot VM. You may find this post and cheat sheet useful.

Secondly, spot virtual machines aren’t always available. So if you depend on one particular instance type, you may run into trouble during mass spot interruptions or periods of low spot availability.

Thirdly, since spot VM pricing changes dynamically, the instance types you need may become more expensive at times. It’s therefore essential to set a maximum price equal to the on-demand rate to minimize the odds of interruptions.
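On Azure, this setting is the `maxPrice` field of a spot VM’s billing profile, where `-1` means “pay up to the on-demand price” and the VM is not evicted for price reasons. The small Python helper below only builds the relevant fragment of an ARM template’s VM `properties`; the function name and its defaults are our own, so check the Azure documentation for the rest of the template.

```python
import json


def azure_spot_properties(max_price_usd_per_hour=None, eviction_policy="Deallocate"):
    """Build the spot-related fragment of an Azure VM's ARM 'properties' block.

    max_price_usd_per_hour=None maps to maxPrice -1: pay the current spot price,
    capped at the on-demand price, and never get evicted for price reasons.
    """
    if eviction_policy not in ("Deallocate", "Delete"):
        raise ValueError("eviction_policy must be 'Deallocate' or 'Delete'")
    max_price = -1 if max_price_usd_per_hour is None else max_price_usd_per_hour
    return {
        "priority": "Spot",
        "evictionPolicy": eviction_policy,
        "billingProfile": {"maxPrice": max_price},
    }


print(json.dumps(azure_spot_properties(), indent=2))
```

Passing an explicit price instead of `None` caps what you pay, at the cost of being evicted whenever the spot price rises above that cap.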

It also helps to know where to find the cheapest resources best suited to your current workload’s needs, and that’s another area where automation can help.

Spot automation in action: OpenX

Serving thousands of brands worldwide, OpenX is a leader in programmatic advertising, powering monetization and advertising revenue for publishers.

To build a real-time ad exchange helping advertisers get the highest value, the company uses Google Kubernetes Engine (GKE) to run over 200,000 CPUs across a dozen regions.

OpenX quickly saw that striking a balance between the cost of cloud infrastructure and its product revenue can be challenging. Initially, the team used the native GKE cluster autoscaler, but it soon proved insufficient as the infrastructure grew more sophisticated.

CAST AI autoscaler’s ability to handle variable pricing in real time enabled OpenX to move and run almost 100% of compute on spot VMs across different regions. This shift has unlocked sizeable savings for the company and reduced the engineering workload around configuring and provisioning cloud resources.

The spot fallback feature ensures that workloads always have a place to run by moving them to on-demand resources in case of a spot drought.

It’s a normal situation to be unable to obtain spot capacity at the moment. But the capacity situation at Google Cloud is very dynamic.

If you can’t obtain the spot capacity now, you might be able to in 10 minutes. That’s why spot fallback works great for us – we can expect CAST AI to maintain the best possible cost for the cluster by constantly attempting to replace the on-demand capacity with spot.

Ivan Gusev, Principal Cloud Architect at OpenX

How to make the most of spot VM automation

As the example from OpenX demonstrates, spot VM automation can support your AI product development in multiple ways. Let’s whizz through different CAST AI features that help to make it happen.

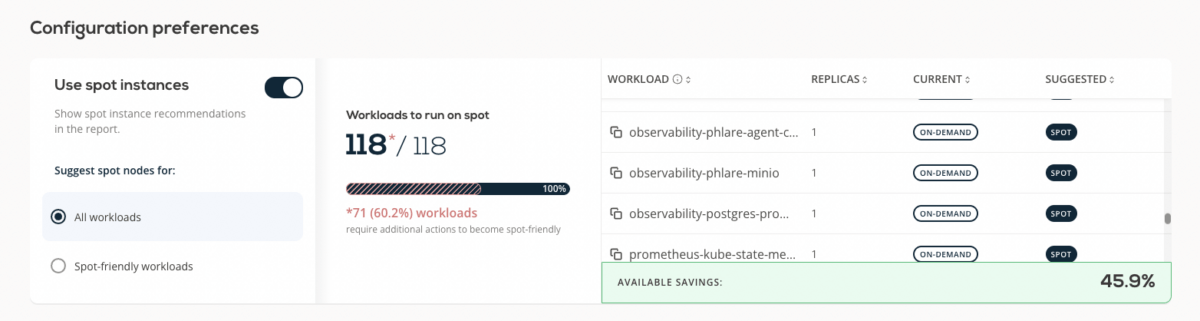

1. Discover potential savings with spot virtual machines

Not every workload is spot-friendly. CAST AI allows you to assess which of your workloads could move to spot VMs safely and how much savings such a shift would generate.

Once you connect a cluster to CAST AI, run a free Savings Report to see projected savings in different scenarios.
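CAST AI produces its own estimate in the report. To illustrate the underlying arithmetic only, here is a back-of-the-envelope Python sketch, assuming you know each workload’s hourly on-demand cost, whether it is spot-friendly, and a single flat spot discount; the workload names, costs, and the 70% discount used below are made-up numbers, and 730 is the average number of hours in a month.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    name: str
    on_demand_cost_per_hour: float  # current cost of the nodes it needs
    spot_friendly: bool             # e.g. stateless, or checkpoints regularly


def projected_monthly_savings(workloads, spot_discount, hours_per_month=730):
    """Estimate monthly savings if every spot-friendly workload moved to spot VMs.

    spot_discount is a fraction: 0.7 means spot costs 70% less than on-demand.
    """
    if not 0 <= spot_discount < 1:
        raise ValueError("spot_discount must be in [0, 1)")
    hourly = sum(w.on_demand_cost_per_hour for w in workloads if w.spot_friendly)
    return hourly * spot_discount * hours_per_month


workloads = [
    Workload("batch-training", 10.0, True),
    Workload("api", 4.0, False),  # stays on on-demand, so it saves nothing
    Workload("inference-workers", 6.0, True),
]
print(f"${projected_monthly_savings(workloads, 0.7):,.2f} per month")
```

Here only the two spot-friendly workloads count: $16 per hour at a 70% discount over 730 hours comes to roughly $8,176 per month.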

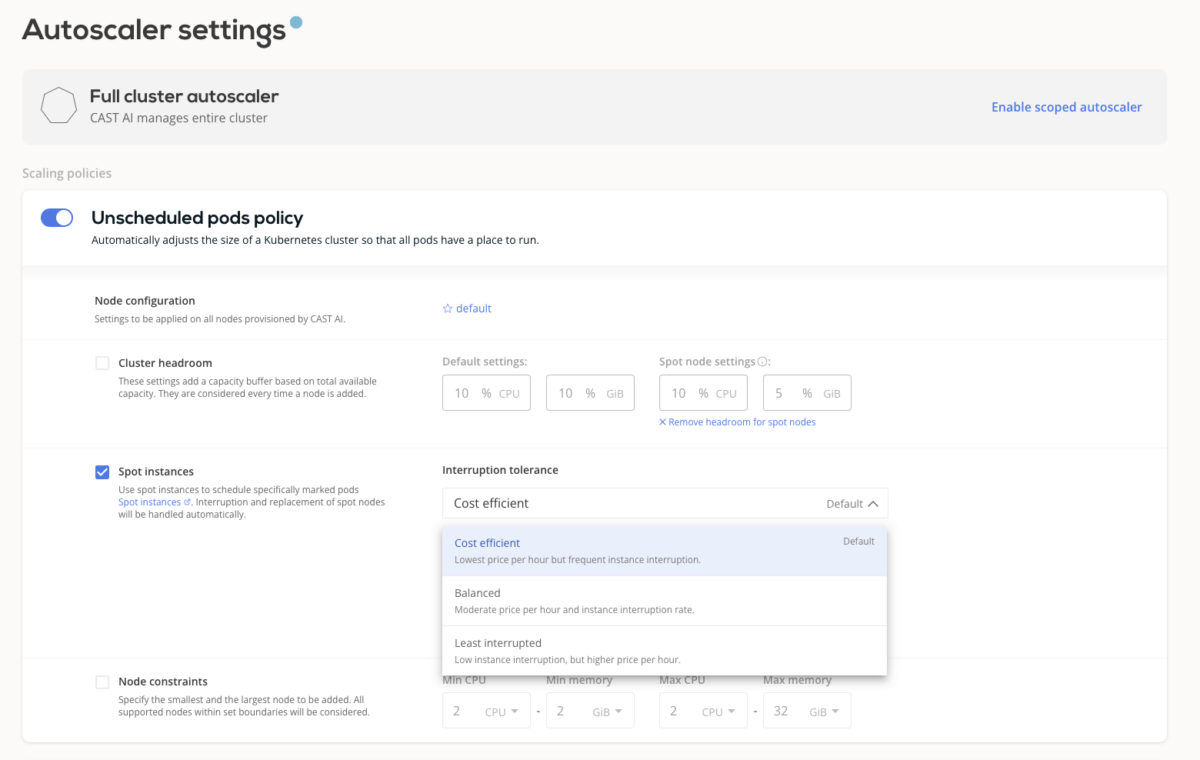

2. Automate the provisioning of spot VMs

Picking the right instance for your workload’s changing needs can be a tall order. CAST AI identifies what your pods require and automatically selects VMs that match those criteria, choosing the cheapest instance types available.
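The core idea, filtering instance types by what the workload requests and then taking the one with the lowest spot price, can be sketched in a few lines of Python. This is a simplified illustration, not CAST AI’s algorithm: the catalog, instance names, and prices are hypothetical, and a real autoscaler also bin-packs several pods per node and accounts for spot availability.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class InstanceType:
    name: str
    vcpus: int
    memory_gib: float
    gpus: int
    spot_price_per_hour: float


def cheapest_match(catalog, vcpus, memory_gib, gpus=0):
    """Return the cheapest instance type that satisfies the requests, or None."""
    candidates = [
        it for it in catalog
        if it.vcpus >= vcpus and it.memory_gib >= memory_gib and it.gpus >= gpus
    ]
    return min(candidates, key=lambda it: it.spot_price_per_hour, default=None)


# Hypothetical catalog with made-up spot prices.
catalog = [
    InstanceType("cpu-small", 4, 16, 0, 0.05),
    InstanceType("cpu-large", 16, 64, 0, 0.20),
    InstanceType("gpu-1x", 8, 32, 1, 0.45),
]
print(cheapest_match(catalog, vcpus=8, memory_gib=32))
```

Returning `None` when nothing fits makes the “no suitable spot capacity” case explicit, which is exactly where a fallback strategy has to take over.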

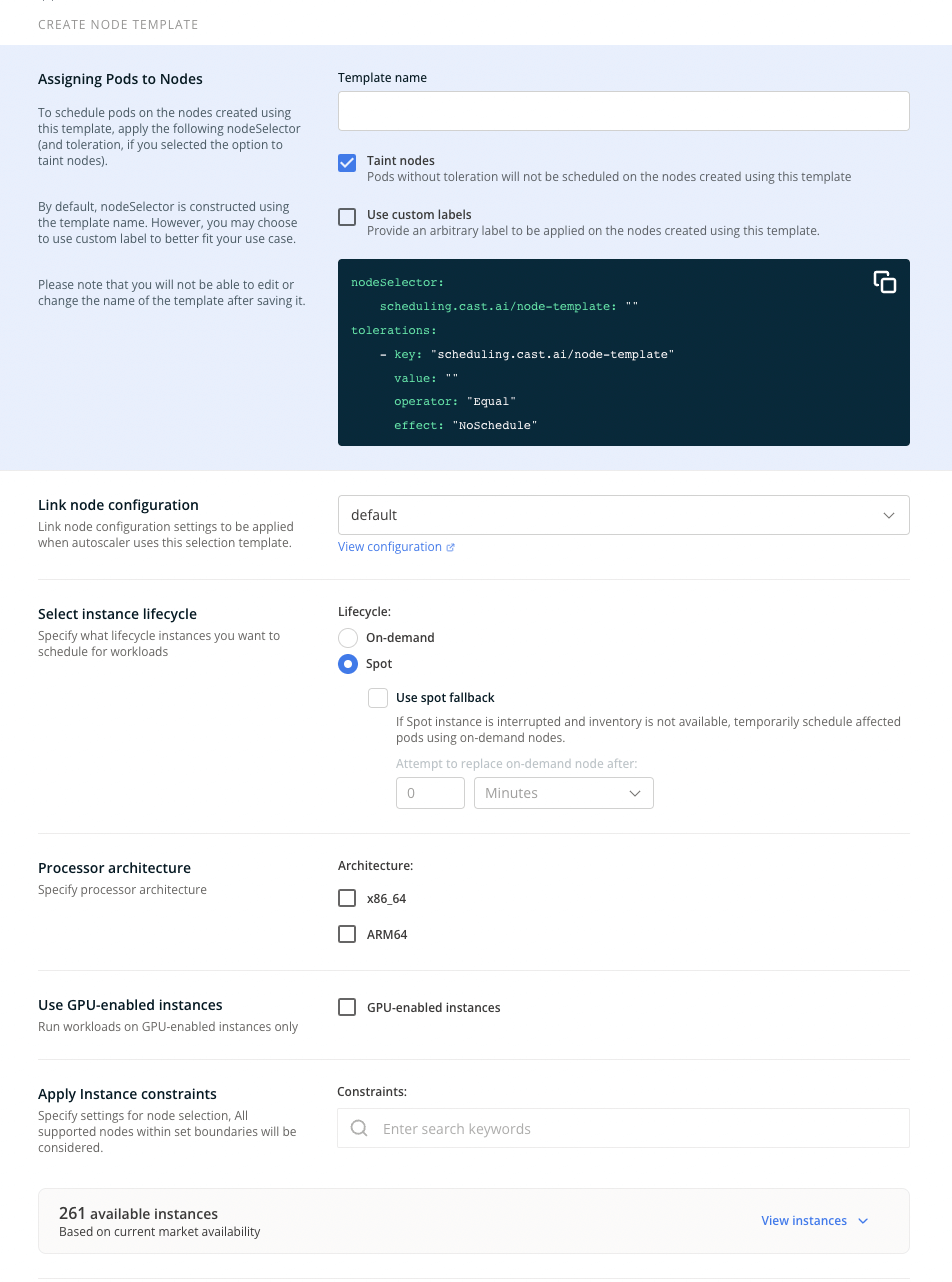

Head to Autoscaler > Settings > Unscheduled pods policy to enable spot VM automation for your cluster. Pick from the available interruption tolerance settings or specify your own requirements – more on that in the documentation.

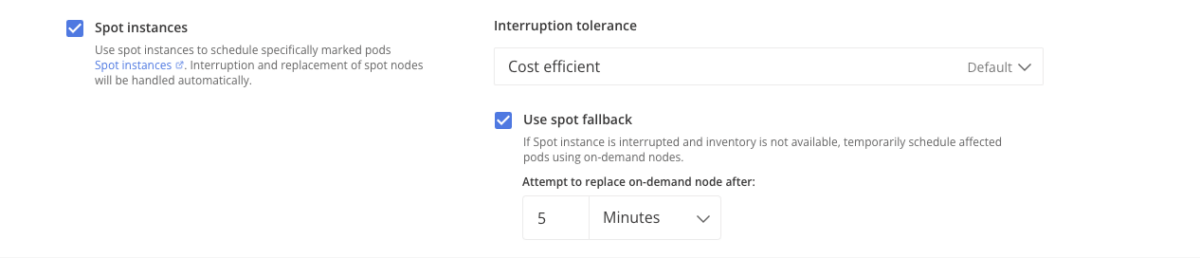

3. Fall back on on-demand instances when necessary

Running spot VMs becomes problematic when no spot capacity matches your workload’s needs. CAST AI can temporarily fall back on on-demand instances until the situation changes and then move your workloads back to spot VMs once they become available again.

Head to Autoscaler > Settings > Unscheduled pods policy to specify your preferences. You can learn more about spot fallback in the documentation.
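Conceptually, fallback comes down to two steps: prefer spot when provisioning, and periodically try to replace nodes that landed on on-demand only because spot was unavailable. The Python sketch below models just that decision logic; `spot_has_capacity` is a hypothetical stand-in for a real capacity check, and draining and deleting the replaced node is omitted.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Node:
    name: str
    lifecycle: str             # "spot" or "on-demand"
    is_fallback: bool = False  # on-demand only because spot was unavailable


def provision(name: str, spot_has_capacity: Callable[[], bool]) -> Node:
    # Prefer spot; mark on-demand nodes that exist only as a stop-gap.
    if spot_has_capacity():
        return Node(name, "spot")
    return Node(name, "on-demand", is_fallback=True)


def replace_fallbacks(nodes: List[Node], spot_has_capacity: Callable[[], bool]) -> List[Node]:
    # Run periodically: spot capacity that is missing now may be back in minutes.
    # Nodes deliberately kept on on-demand (is_fallback=False) are left alone.
    result = []
    for node in nodes:
        if node.is_fallback and spot_has_capacity():
            result.append(Node(node.name, "spot"))
        else:
            result.append(node)
    return result
```

Tracking *why* a node is on-demand matters: without the `is_fallback` flag, the periodic pass would also move workloads that were put on on-demand on purpose.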

4. Diversify spot VMs for fewer interruptions

Relying on a limited set of instance types can be problematic during mass interruptions and periods of low spot availability. A wider array of instance types lowers the node interruption rate in the cluster and increases the uptime of your workloads.

CAST AI’s newest feature – Spot Diversity – aims to strike a balance between the most diverse and cheapest instance types. Find out more in the documentation.
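One simple way to trade price against diversity, shown here purely as an illustration and not as how Spot Diversity works internally, is to accept instance types that cost at most a fixed percentage more than the cheapest option and to take at most one type per instance family. The offers, family names, 20% threshold, and three-type cap in this Python sketch are assumptions.

```python
def diversified_choice(offers, max_price_increase=0.2, max_types=3):
    """Pick up to max_types instance types from different families.

    offers: list of (instance_type, family, spot_price_per_hour) tuples that
    already satisfy the workload's requirements. Types costing more than
    (1 + max_price_increase) times the cheapest price are excluded.
    """
    if not offers:
        return []
    cheapest = min(price for _, _, price in offers)
    limit = cheapest * (1 + max_price_increase)
    chosen = {}  # family -> instance type; dicts keep insertion (price) order
    for itype, family, price in sorted(offers, key=lambda o: o[2]):
        if price > limit or len(chosen) >= max_types:
            break
        chosen.setdefault(family, itype)  # keep only the cheapest type per family
    return list(chosen.values())


# Hypothetical offers with made-up prices.
offers = [
    ("a1.large", "a1", 0.100),
    ("a1.xlarge", "a1", 0.105),
    ("b2.large", "b2", 0.110),
    ("c3.large", "c3", 0.118),
    ("d4.large", "d4", 0.150),
]
print(diversified_choice(offers))
```

Raising `max_price_increase` buys more diversity (and fewer simultaneous interruptions) at a higher average price; lowering it does the opposite.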

5. Schedule new pods to spot automatically

Default Kubernetes scheduling is often too generic and expensive. Using node templates from CAST AI, you can automatically schedule new pods to spot VMs and use the fallback option to return to on-demand in case of low availability of spot virtual machines.

Find out more in the documentation.
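On the workload side, pods usually opt into spot nodes with a node selector and a matching toleration. The Python helper below builds such a Pod manifest as a dictionary. The `scheduling.cast.ai/spot` key is the spot label and taint key we understand CAST AI to use, so treat it as an assumption and confirm it in the documentation; on plain GKE, spot nodes carry the `cloud.google.com/gke-spot` label instead.

```python
import json


def spot_pod_manifest(name, image, spot_key="scheduling.cast.ai/spot"):
    """Build a minimal Pod manifest that only schedules onto spot nodes.

    spot_key is an assumed node label/taint key; adjust it to your platform.
    """
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            # Only schedule onto nodes labeled as spot.
            "nodeSelector": {spot_key: "true"},
            # Tolerate the taint that keeps other pods off spot nodes.
            "tolerations": [
                {"key": spot_key, "operator": "Exists", "effect": "NoSchedule"}
            ],
            "containers": [{"name": name, "image": image}],
        },
    }


# Hypothetical pod name and image.
print(json.dumps(spot_pod_manifest("trainer", "example.com/trainer:latest"), indent=2))
```

The resulting dictionary can be serialized to JSON or YAML and applied with `kubectl apply -f`.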

Improve your product margin with spot VM automation

Businesses behind AI solutions often struggle with profit margins, but they can drastically improve their product cost structure with spot VMs. Offering cost-effective access to high-performance compute resources, spot VMs can accelerate your product development efforts while reducing your bill.

Using spot VM automation, you can mitigate the risks related to potential spot interruptions, generate considerable savings, and unlock new opportunities.

CAST AI clients save an average of 63% on their Kubernetes bills.

Check out what spot VM automation could do for you – book a technical demo today.
