The State of Kubernetes Report: Overprovisioning in Real-Life Containerized Applications

Facing the 2022 economic downturn, organizations strive to cut costs and increase their cloud computing ROI. This report shows the current state of cloud cost management for Kubernetes and shares proven cost optimization practices.

37% of compute capacity is not used

More than 2X as many clusters could use spot instances

60% average savings for companies using CAST AI

One of the major drivers of wasted cloud spend is the inability to scale back once application demand drops.

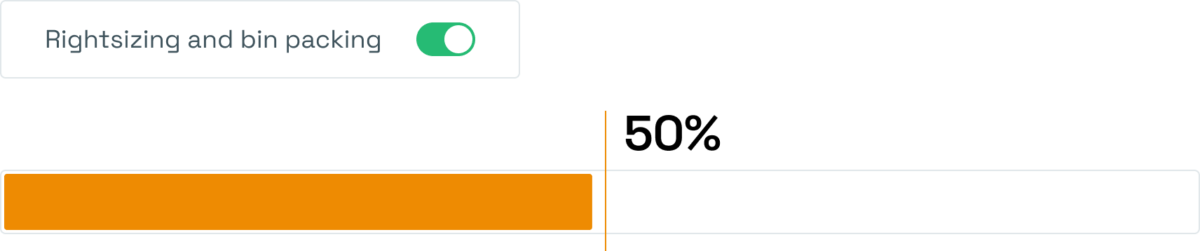

Our data shows that by eliminating this sort of overprovisioning, organizations stand to reduce their monthly cloud spend by almost 50%.

By adding spot instances to the mix, organizations cut their cloud spend by 60% on average [1].

Before we share the most efficient cost-cutting methods, let’s take a look at the current cloud cost management landscape.

Cloud spending is overtaking traditional IT hardware

When asked which projects will see the highest spending growth in 2022, CIOs point first to cloud computing [2].

Organizations spent $18.3 billion on cloud computing in the first quarter of 2022, an increase of 17.2% YoY, with public cloud services accounting for $12.5 billion (68%) of the total [3].

IDC forecasts that cloud spending in 2022 will grow by 22% compared to 2021 and reach $90.2 billion – the highest annual growth rate since 2018 [4].

In a survey of 753 global cloud decision makers and users, 37% reported their annual spend exceeded $12 million [5].

Still, teams struggle to control the growing cloud costs

Budgeting and planning for the cloud is tricky.

13% average cloud budget overrun

32% of total cloud spend gets wasted

$4B worth of resources wasted just in Q1 2022

Companies go over their cloud budgets by an average of 13% [5]. At the same time, they end up wasting 32% of their total cloud spend on average [6]. This means that in the first quarter of 2022 alone, $4 billion worth of cloud resources went to waste.
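The $4 billion figure appears to follow from applying the 32% waste rate to the $12.5 billion of public cloud spend reported for Q1 2022. The report does not spell out this step, so the Python sketch below is an assumption about the intended basis, and the function name `estimate_wasted_spend` is illustrative only.

```python
def estimate_wasted_spend(total_spend_billion: float, waste_rate: float) -> float:
    """Return the estimated wasted spend, in billions of dollars."""
    if not 0.0 <= waste_rate <= 1.0:
        raise ValueError("waste_rate must be between 0 and 1")
    return total_spend_billion * waste_rate


# Figures quoted in this report; pairing them this way is an assumption.
public_cloud_q1_2022 = 12.5  # $ billion of public cloud spend, Q1 2022
average_waste_rate = 0.32    # share of cloud spend reported as wasted

wasted = estimate_wasted_spend(public_cloud_q1_2022, average_waste_rate)
print(f"Estimated waste: ${wasted:.1f} billion")
```

Applying the same rate to the full $18.3 billion would give a larger number, which suggests the estimate covers public cloud services only.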

The primary reasons behind cloud waste are:

- Lack of visibility into cloud usage and costs,

- Overprovisioning,

- Leaving cloud resources idle,

- Fragmentation of usage between teams and departments.

If there was ever a time to optimize costs, it’s now:

81% of IT leaders say their C-Suite directs them to reduce or avoid taking on additional cloud spending as part of the cost-cutting measures amid the current economic downturn [8].

We analyzed cloud cost savings from thousands of cloud-native applications that use CAST AI. Our data does not come from a survey: we measured the average savings achieved with several cost optimization strategies. The most powerful one is pure rightsizing – the real-time elimination of unused CPUs in cloud-native applications.

We applied price arbitrage by asking our engine to swap high-cost VMs with equivalent lower-cost machines while keeping the same ratio of on-demand and spot instances. Finally, we analyzed the impact of moving workloads to spot instances, but limited spot usage to spot-friendly workloads, such as stateless, high-replica containers.

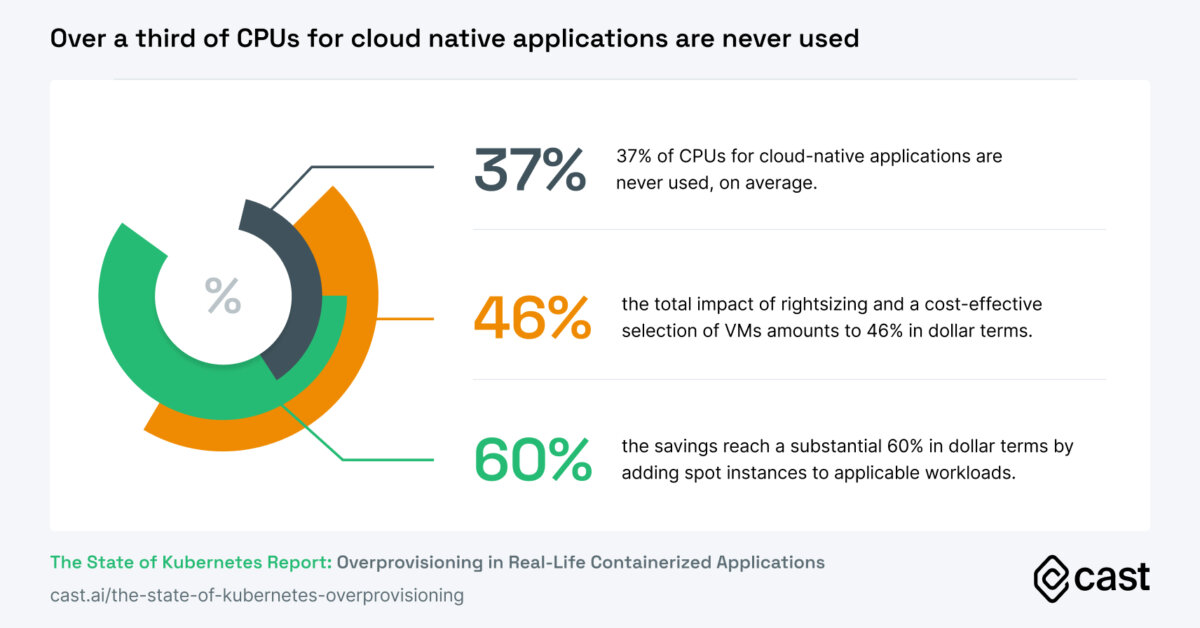

Our data suggest a higher rightsizing impact compared to previous industry surveys: 37% of CPUs for cloud-native applications are never used, on average. By optimizing clusters and removing unnecessary compute resources, CAST AI slashes the server load by 37%, freeing these resources to be used more efficiently.

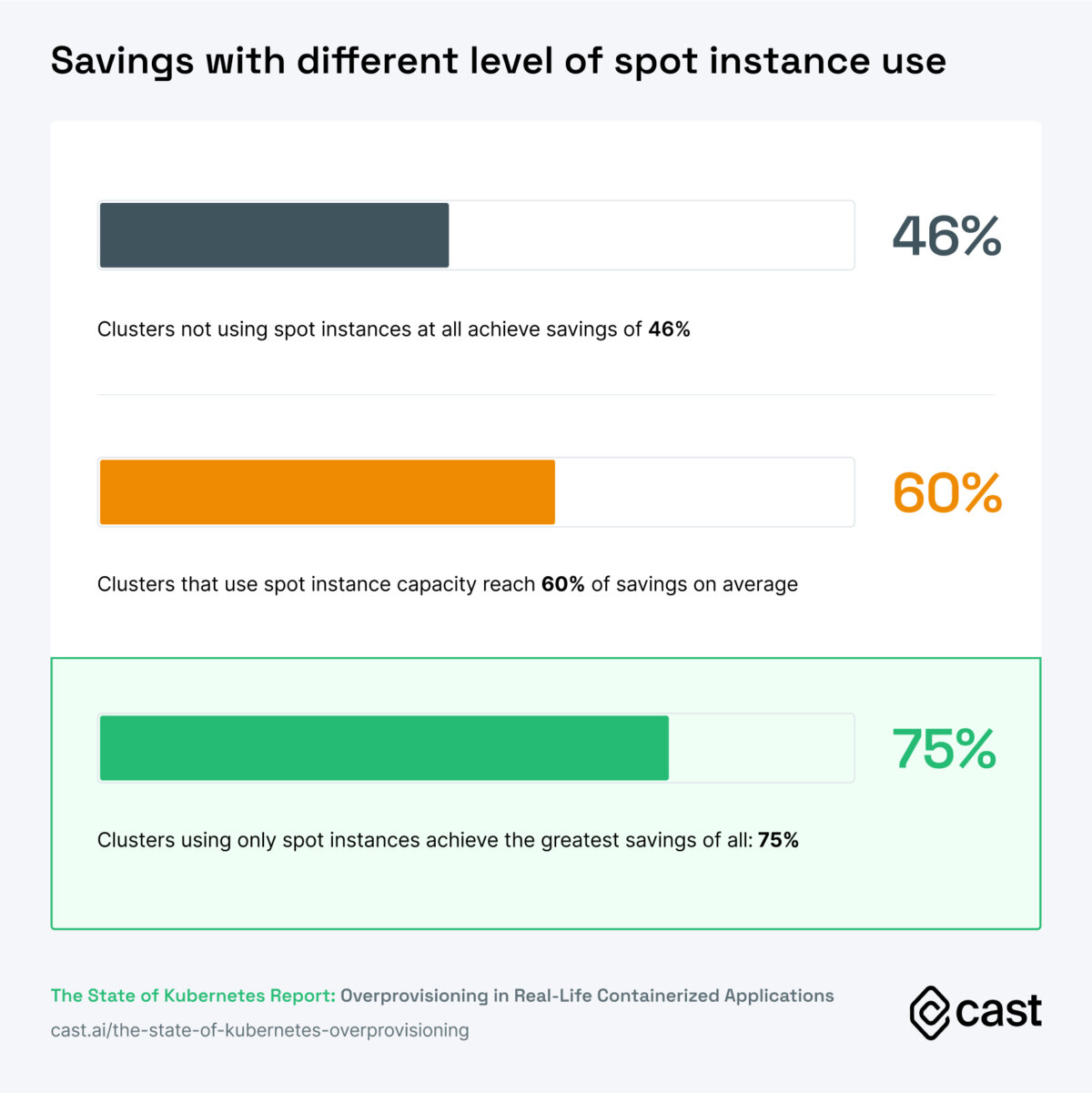

When we add pricing arbitrage, the combined impact approaches half of the cloud compute bill: rightsizing plus a cost-effective selection of VMs saves 46% in dollar terms.

And by adding spot instances to applicable workloads, the savings reach a substantial 60% in dollar terms, on average.

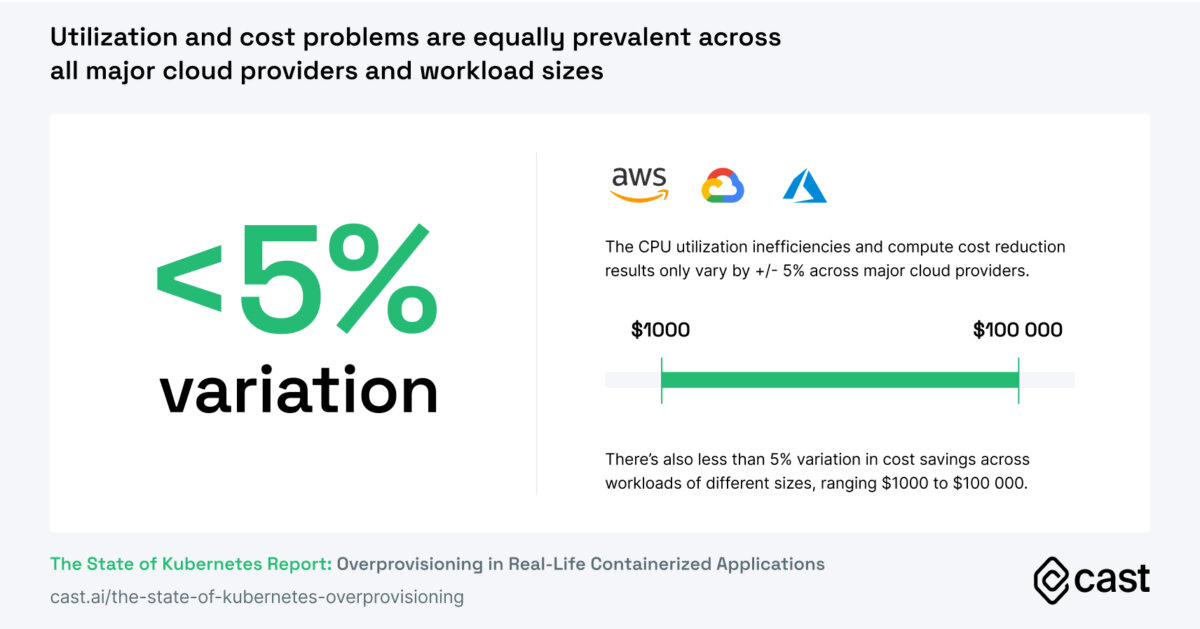

These numbers are similar across Amazon Web Services, Google Cloud Platform, and Microsoft Azure, with a variation of ±5% across providers. Moreover, they don’t depend on the application size: we noted a variation of less than 5% between small applications costing $1,000 per month and larger applications costing $100,000 per month. This seems to indicate that the rightsizing problem is universal and closely related to how cloud-native applications are managed.
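The three savings figures in this section (37%, 46%, and 60%) are cumulative, so it can help to see what each step contributes on its own. The sketch below is a rough illustration only. It assumes that the 37% reduction in CPUs maps one-to-one to dollars, which the report does not state explicitly, and the $10,000 monthly bill and the function name `incremental_reductions` are hypothetical.

```python
# Cumulative savings quoted in this section, as fractions of the original bill.
# Treating the 37% CPU reduction as a 37% dollar reduction is an assumption.
CUMULATIVE_SAVINGS = [
    ("rightsizing", 0.37),
    ("rightsizing + price arbitrage", 0.46),
    ("rightsizing + price arbitrage + spot", 0.60),
]


def incremental_reductions(cumulative):
    """For each step, return (name, remaining share of the original bill,
    cut relative to the bill left by the previous step)."""
    results = []
    remaining_before = 1.0
    for name, saved in cumulative:
        remaining_after = 1.0 - saved
        step_cut = 1.0 - remaining_after / remaining_before
        results.append((name, remaining_after, step_cut))
        remaining_before = remaining_after
    return results


monthly_bill = 10_000.0  # hypothetical starting bill in dollars
steps = incremental_reductions(CUMULATIVE_SAVINGS)
for name, remaining, step_cut in steps:
    print(f"{name}: bill ${monthly_bill * remaining:,.0f}, step cut {step_cut:.1%}")
```

Under these assumptions, price arbitrage trims about 14% off the already rightsized bill, and spot instances trim about 26% off what remains after that.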

Wasted resources carry a significant environmental toll

Overprovisioning and resource waste have a direct impact on the natural environment. Back in 2015, a 30% decrease in the power consumed by data centers would have saved 124.86 TWh globally. With US electricity consumption at roughly 3,900 TWh per year, that is enough to power the entire USA for about 12 days [9]. You can only imagine the scale of waste when translated to the current levels of data center energy consumption.

The environmental cost of running virtual machines in the cloud also depends on the region where the data centers are located, as some regions rely on less sustainable power sources.

CAST AI and similar end-to-end platforms can help to reduce environmental impact further as they pick newer machine types with less power-hungry CPUs, reducing the machine’s energy footprint.

How organizations reduce cloud waste to save millions on the cloud

Gaining real-time visibility into costs

of organizations said the primary reason behind cloud waste is lack of visibility into cloud usage and efficiency [10].

Solving this almost always requires a third-party solution, since cost visibility tools from cloud providers neither display cost data in real time for anomaly detection nor offer sufficient options for sorting data.

Forward-thinking FinOps, Engineering and DevOps teams use third-party cost monitoring solutions that provide full visibility into cloud costs. Building a custom monitoring tool in-house wastes internal resources.

Improving cloud resource utilization

As noted, roughly 37% of the cloud resources companies provision go unused, leading to massive waste both monetarily and environmentally [11].

Overprovisioning is tracked by comparing CPUs provisioned vs. CPUs requested. The larger the difference between them, the more waste the team generates.
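As a minimal sketch of this comparison, the Python snippet below expresses the gap between provisioned and requested CPUs as a share of what was provisioned. The `ClusterCpu` type, the `overprovisioned_share` function, and the cluster figures are hypothetical and chosen for illustration. They are not CAST AI's implementation or data.

```python
from dataclasses import dataclass


@dataclass
class ClusterCpu:
    name: str
    provisioned: float  # CPUs available on the cluster's nodes
    requested: float    # CPUs requested by the workloads


def overprovisioned_share(cluster: ClusterCpu) -> float:
    """Fraction of provisioned CPUs that no workload has requested."""
    if cluster.provisioned <= 0:
        raise ValueError("provisioned CPUs must be positive")
    # Clamp at zero so an oversubscribed cluster does not report negative waste.
    gap = max(cluster.provisioned - cluster.requested, 0.0)
    return gap / cluster.provisioned


# Hypothetical clusters, for illustration only.
clusters = [
    ClusterCpu("checkout", provisioned=100, requested=63),
    ClusterCpu("batch", provisioned=48, requested=42),
]
for c in clusters:
    print(f"{c.name}: {overprovisioned_share(c):.0%} of provisioned CPUs are not requested")
```

Dividing by provisioned CPUs rather than requested CPUs keeps the result directly comparable to the 37% figure above, which is stated as a share of total capacity.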

The solution to cut waste is within reach for containerized applications. At CAST AI, we’ve seen companies reduce their monthly cloud spend by nearly 50% by eliminating overprovisioning alone. Adding spot instances to the mix increases the savings by roughly another 10 percentage points [12].

The savings trend above persists across different industries and company sizes as seen in real-life case studies.

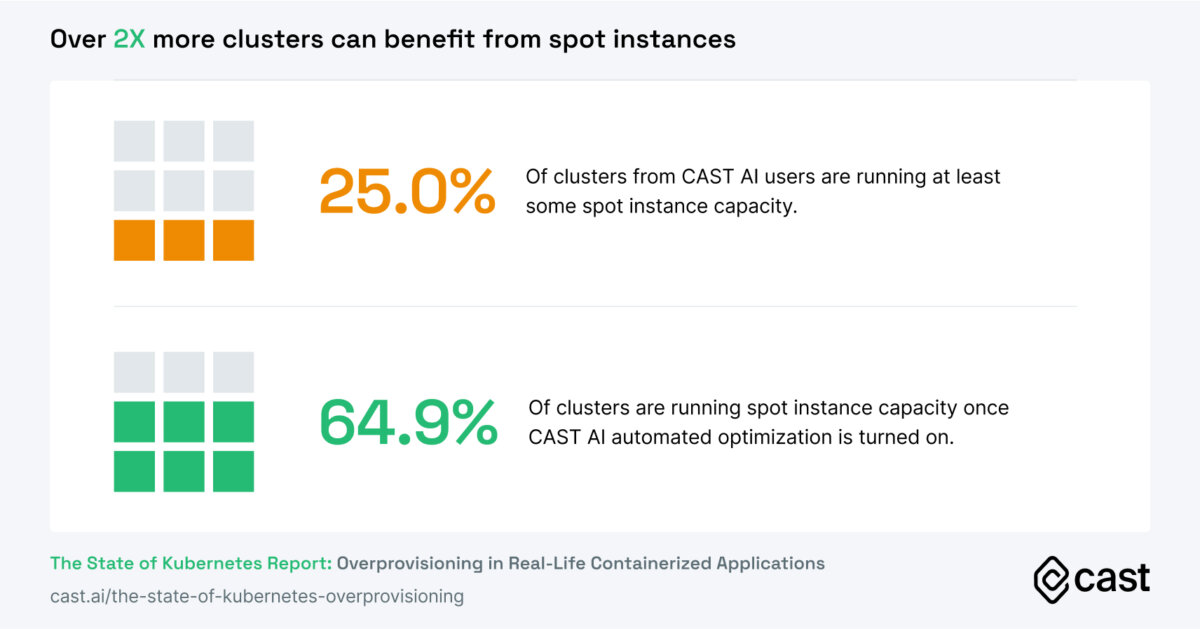

Using more spot instances

Spot instances or VMs offer a great cost-cutting opportunity, with discounts reaching even 90% off the on-demand rate.
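To see how a spot discount carries through to the overall bill, the sketch below blends on-demand and spot pricing for a given share of spend moved to spot. The 70% discount, the 50% spot share, and the `blended_cost` function are hypothetical values picked for illustration. Actual discounts vary by provider, region, and instance type.

```python
def blended_cost(on_demand_cost: float, spot_share: float, spot_discount: float) -> float:
    """Cost after moving `spot_share` of on-demand spend to spot at `spot_discount`."""
    for value in (spot_share, spot_discount):
        if not 0.0 <= value <= 1.0:
            raise ValueError("shares and discounts must be between 0 and 1")
    on_demand_part = on_demand_cost * (1.0 - spot_share)
    spot_part = on_demand_cost * spot_share * (1.0 - spot_discount)
    return on_demand_part + spot_part


# Hypothetical: a $10,000 monthly bill with half of it moved to spot at a 70% discount.
cost = blended_cost(10_000.0, spot_share=0.5, spot_discount=0.7)
print(f"Blended monthly cost: ${cost:,.0f}")
```

The overall saving equals the spot share multiplied by the discount, so the headline discount only applies in full when every workload can run on spot.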

How do teams use spot instances?

How does using spot instances translate into cost savings?

Spot-only savings are substantially higher than indicated in our previous State of Kubernetes analysis from Q1 2022. At that time, going “all spot” would have cut costs by 65%.

Find out how your cloud infrastructure compares

If your team uses Kubernetes, you don’t need to rely on averages to know where you stand with your cloud cost management measures.

CAST AI offers free real-time cost monitoring and available savings reports for unlimited clusters. Sign up and scan your clusters in minutes.

Final thoughts

The rapid expansion of the cloud-native ecosystem calls for permanent solutions that would help teams use resources more efficiently.

Kubernetes is a great innovation, but it won’t make applications more cost-efficient on its own. What organizations need are forward-thinking approaches and solutions to stop wasting cloud resources and reinvest the saved funds into their business.

Brought to you by CAST AI

CAST AI automates Kubernetes cost, performance, and security management in one easy-to-use platform for hundreds of companies of all sizes.

Data used to prepare this report:

2000+ most active clusters

450k+ managed CPUs

References

- [1] – This includes clusters running on on-demand resources, spot resources, and a mix of both.

- [2] – AlphaWise for Morgan Stanley, 1Q21 CIO Survey – Data Suggests IT Acceleration.

- [3, 4] – International Data Corporation, Worldwide Quarterly Enterprise Infrastructure Tracker: Buyer and Cloud Deployment.

- [5, 6, 7] – Flexera 2022 State of the Cloud Report.

- [8] – Wanclouds, 2H 2022 Cloud Cost and Optimization Outlook.

- [9] – In 2015, data centers consumed 416.2 TWh of electricity globally (source: eceee, the European Council for an Energy Efficient Economy, https://www.eceee.org/all-news/news/news-2016/2016-01-23/). The annual electricity consumption of the United States is 3,902 TWh (source: WorldData.info).

- [10] – Anodot, State of Cloud Cost Report 2022.

- [11, 12] – CAST AI data.