TL;DR: Terraform is an Infrastructure as Code (IaC) tool that lets engineers define software infrastructure using code. Provisioning infrastructure this way is a powerful abstraction that allows teams to manage large distributed systems at scale. This guide dives into IaC and Terraform to show exactly what problems they solve and what new challenges they create.

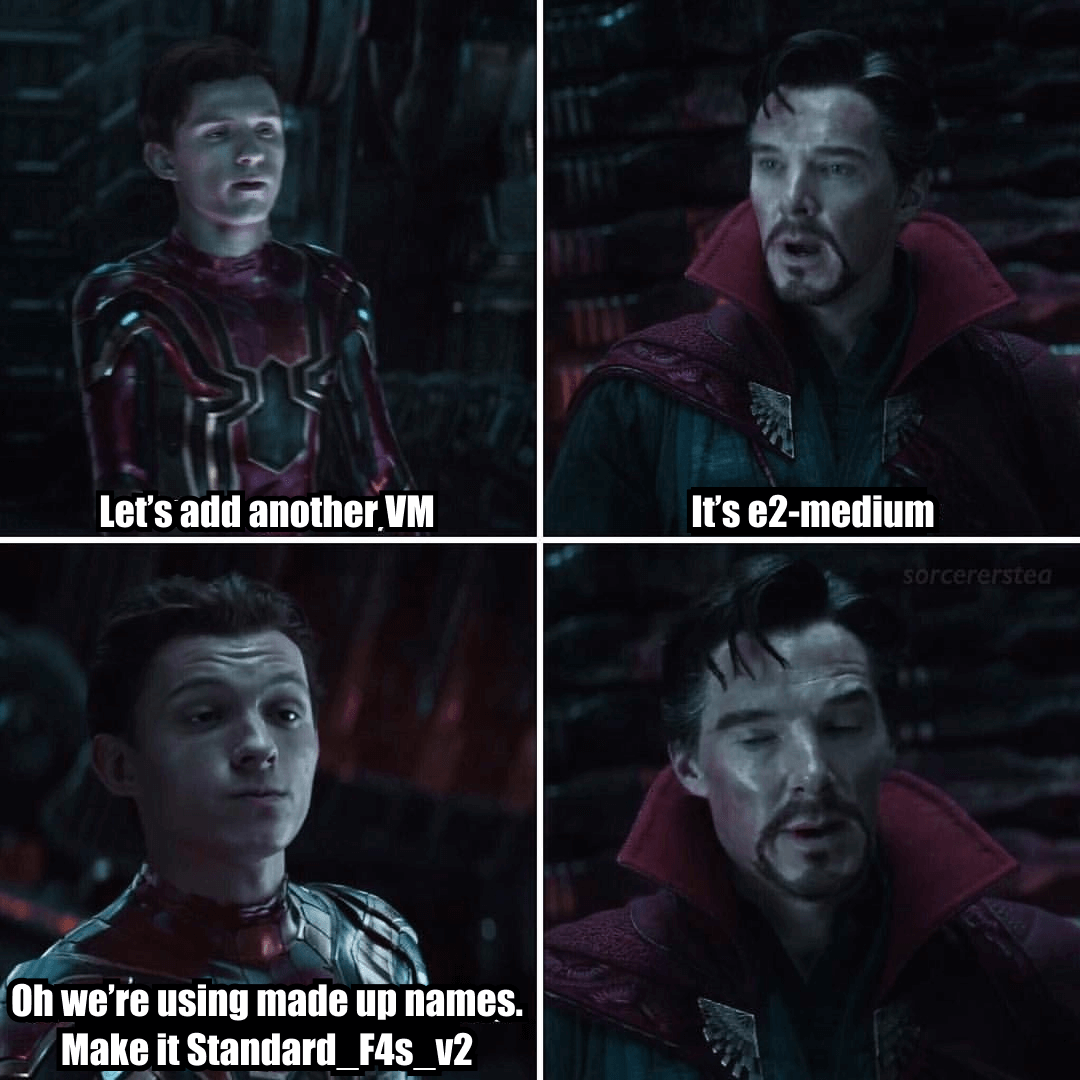

One senior DevOps guy I recently spoke to said he would burst into tears of despair if he got a request like the one in the meme below. Don’t worry, we’ve got you covered.

Enter Terraform

Sometimes a product is so popular that its name becomes synonymous with the whole market: Walkman, Velcro, to photoshop, to google, and to terraform are just a few examples.

For quite some time, Terraform has been the de facto synonym for Infrastructure as Code (IaC). It is an open-source tool, released in 2014, that does one thing and does it extremely well: it makes sure that real-world IT infrastructure is consistent with the desired configuration.

In other words, Terraform is a state machine that can manage any resource backed by a REST API.
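To make the state machine idea concrete, here is a minimal sketch using the AWS provider. The bucket name, region, and provider version constraint are placeholders chosen for illustration. You declare the desired state; terraform plan compares it with the last recorded state and the live API, and terraform apply issues only the API calls needed to close the gap.

```hcl
terraform {
  required_providers {
    aws = {
      source  = "hashicorp/aws"
      version = "~> 5.0" # placeholder constraint
    }
  }
}

provider "aws" {
  region = "us-east-1" # placeholder region
}

# Desired state: one S3 bucket carrying these tags.
# Bucket names are globally unique; this one is a placeholder.
resource "aws_s3_bucket" "logs" {
  bucket = "example-terraform-logs-bucket"

  tags = {
    Environment = "dev"
  }
}
```

If you change Environment to "staging" and run terraform plan again, it should propose an in-place update of the tag rather than a new bucket, because only that attribute differs from the recorded state. Renaming the bucket, by contrast, forces a replacement, since S3 bucket names cannot be changed in place.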

This won’t be a HOW-TO tutorial that duplicates the documentation. Instead, I will share my thoughts on the WHY of Terraform.

I. LOVE. TERRAFORM. I have used it since 2016. We used Terraform to validate our product’s proof of concept at CAST AI.

There is a market challenger called Pulumi, but I don’t think anything really threatens Terraform’s domination at this point.

If you are an engineer and you haven’t heard of Terraform yet, your resume needs urgent CPR (find an hour to complete HashiCorp’s excellent tutorial).

Infrastructure as Code (IaC)

Why are DevOps engineers constantly salivating over Infrastructure as Code? After all, you can open the AWS console in a browser and, with a few clicks, create almost any piece of infrastructure: an EC2 instance, a VPC, an EKS cluster, and so on.

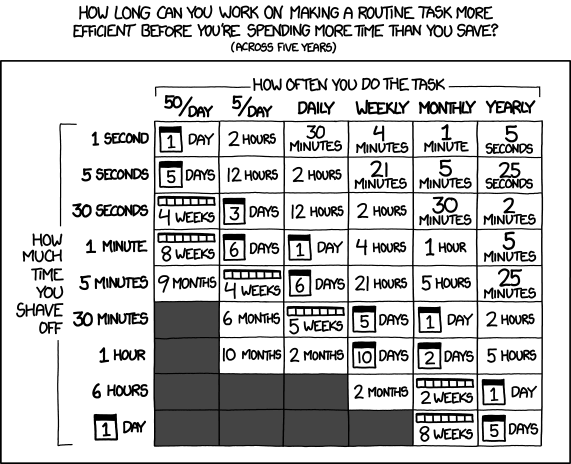

The most intuitive answer is that “automation saves time.” As engineers, we love to automate everything.

To illustrate this point on the very edge of absurdity, read this article about an engineer who wrote scripts to text his wife when he was working late (with a random excuse), brew a pot of coffee just before he arrived at the coffee station, and pull off other great hacks.

Anything that could take more than 90 seconds was a target for automation. As usual, there is an xkcd for that:

Source: https://xkcd.com/1205/

If you’re not creating and destroying cloud infrastructure on a daily basis, saving time isn’t even the deciding factor.

The problem is that DevOps engineers are, well, too human. They make human mistakes, they get distracted and tired, they fall victim to fat finger errors. They are also expensive and get demotivated when doing the same things over and over again.

Infrastructure as Code (IaC) solves these problems by providing consistency. You want your infrastructure provisioning to be deterministic.

The third big reason is that if it’s code, all the nice benefits of the CI/CD pipeline and code reviews are applicable. One engineer writes IaC while another engineer peer reviews.

In this workflow, a pull request should include the Terraform plan output, not just the changed HashiCorp Configuration Language (HCL) code, so reviewers can see exactly what will change in the infrastructure. Running Terraform in CI/CD pipelines also frees the DevOps engineer to focus on other mentally demanding work instead of constantly checking the terraform apply status in a terminal window.

Every DevOps engineer will take consistency and reduced risk on infrastructure changes every day of the week and twice on Sunday.

IaC also reduces the need to write and maintain separate infrastructure documentation, because the code itself describes the infrastructure and, as long as every change goes through it, never goes out of date.

My acquaintance with IaC

I used to work in the traditional banking industry, which historically is a very IT risk-averse environment. But the sector also had a wake-up call. If traditional financial companies don’t start delivering innovation fast, they will be eaten by the fleet of emerging FinTech start-ups.

Business Development was baking new features that went through a rigorous change management process and QA sign-offs, but the following scenario kept happening:

It worked fine in development and staging, but production is down AGAIN!

The problem was that the Development, Staging, and Production environments naturally drifted apart in configuration over time. Testing and releasing in big batches on a quarterly basis (those were the dark times) failed to catch infrastructure and application compatibility bugs, which caused business application downtime.

This problem is still relevant today, even in homogeneous private clouds like OpenStack or VMware Cloud Director, or in single public cloud environments. The issue is compounded in hybrid cloud and multi-cloud environments.

So, how do you keep your infrastructure in check?

In my past life, we created a Terraform provider for our private cloud, along with a blueprint that would create servers, K8s clusters and namespaces, set RBAC, deploy middleware and databases, create network enclaves, open firewalls, configure load balancers, expose applications to the internet through a security sandwich, and so on.

It was a single Terraform file with not too many lines of code, but it abstracted more than 100 individual tasks. The same Terraform configuration, kept in a single git repository, was used for Development, Staging, and Production. That was the key to avoiding configuration drift between environments.
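A minimal sketch of that pattern, assuming an aws provider configured elsewhere: one configuration, with the environment name as its main input. The variable names, the AMI input, and the per-environment instance sizes are hypothetical, not the original blueprint.

```hcl
variable "environment" {
  type        = string
  description = "Target environment: dev, staging or prod"

  # Reject typos early instead of silently creating a fourth environment.
  validation {
    condition     = contains(["dev", "staging", "prod"], var.environment)
    error_message = "The environment must be one of: dev, staging, prod."
  }
}

variable "ami_id" {
  type        = string
  description = "AMI to boot (hypothetical input, supplied per environment)"
}

locals {
  # Hypothetical sizing: the only intended difference between environments.
  instance_types = {
    dev     = "t3.micro"
    staging = "t3.small"
    prod    = "t3.large"
  }
}

# Assumes an aws provider block is configured elsewhere in the project.
resource "aws_instance" "app" {
  ami           = var.ami_id
  instance_type = local.instance_types[var.environment]

  tags = {
    Environment = var.environment
  }
}
```

You would then run, for example, terraform apply -var-file=prod.tfvars, where prod.tfvars sets environment = "prod" and the AMI ID. Each environment still needs its own state (a separate backend configuration or workspace), but the code path is identical, so the environments differ only in the values you pass in.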

Side note: Your IaC is only as solid as your discipline to NEVER EVER change anything in the infrastructure outside of IaC. We’ve all heard the sad proclamation:

I will just change this one setting through the cloud web console during an urgent P1 incident, it’s temporary, I will definitely revert it back.

Provider for anything

Terraform’s main strength is that anyone can write a Terraform provider for any API. A Terraform provider, sometimes also called a “plugin,” is a Go binary for Linux, macOS, or Windows. Providers published on the official Terraform Registry are installed automatically when you run terraform init. You can also distribute and install the binary manually, which is useful when your API endpoint is not exposed to the internet (an internal application, a custom private cloud, etc.).
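For the manual route, Terraform’s CLI configuration file can point at a local filesystem mirror. A minimal sketch, assuming a hypothetical internal provider published under the made-up address example.com/mycorp/privatecloud and a mirror directory of /opt/terraform/providers:

```hcl
# ~/.terraformrc on Linux and macOS (CLI configuration, not project code)
provider_installation {
  # Install providers from the hypothetical example.com host from a local directory...
  filesystem_mirror {
    path    = "/opt/terraform/providers"
    include = ["example.com/*/*"]
  }

  # ...and fetch everything else from the public registry as usual.
  direct {
    exclude = ["example.com/*/*"]
  }
}
```

The project then requires it like any other provider:

```hcl
terraform {
  required_providers {
    privatecloud = {
      source  = "example.com/mycorp/privatecloud" # hypothetical internal provider
      version = "1.0.0"
    }
  }
}
```

With the unpacked mirror layout, the binary would live under a path such as /opt/terraform/providers/example.com/mycorp/privatecloud/1.0.0/linux_amd64/. Check the provider installation section of the Terraform CLI documentation for the exact layout your Terraform version expects.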

At the time of writing, there are 671 Terraform providers published in the public registry, and probably countless internal ones. Every major cloud provider has one, and so do major SaaS vendors. There are also some goofy ones that help you order a pizza, lay Google Fiber cable, create a to-do list, etc.

This means you can have a configuration that spans multiple vendors and components in a single code repository while still expressing the dependencies between them. For example, you can use Terraform to create a CAST AI Kubernetes cluster, set up an S3 bucket in AWS, install well-known middleware with the Terraform Helm provider, deploy your business applications with the Terraform Kubernetes provider without writing K8s YAML manifests, and finally create public DNS records.
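A trimmed-down sketch of the dependency idea, using only the AWS and Kubernetes providers (the CAST AI, Helm, and DNS parts are left out). The bucket name, region, and kubeconfig path are placeholders, and it assumes a cluster is already reachable through your local kubeconfig:

```hcl
terraform {
  required_providers {
    aws = {
      source = "hashicorp/aws"
    }
    kubernetes = {
      source = "hashicorp/kubernetes"
    }
  }
}

provider "aws" {
  region = "us-east-1" # placeholder region
}

provider "kubernetes" {
  # Assumption: an existing cluster reachable via the local kubeconfig.
  config_path = "~/.kube/config"
}

resource "aws_s3_bucket" "assets" {
  bucket = "example-app-assets-bucket" # placeholder, must be globally unique
}

# Referencing the bucket creates an implicit dependency between vendors:
# the AWS resource must exist before the Kubernetes object that uses it.
resource "kubernetes_config_map" "app" {
  metadata {
    name      = "app-config"
    namespace = "default"
  }

  data = {
    ASSETS_BUCKET = aws_s3_bucket.assets.bucket
  }
}
```

Because the ConfigMap references aws_s3_bucket.assets.bucket, Terraform orders its dependency graph accordingly: it creates the bucket first and, on terraform destroy, removes it last.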

Yup, there is a Terraform provider for CAST AI.

OK abstraction but poor generalization

HashiCorp maintains Terraform itself, but most providers are written by the API owners. This means that while all providers speak HCL (HashiCorp Configuration Language), they don’t unify semantics between similar vendors.

It’s not really a problem if you stick to a single vendor. But it quickly becomes tiresome if you’re not OK with being locked in to one commodity provider.

Every cloud service provider insists on calling commodities by its own very special “competitive advantage” names, and this tendency carries over into their Terraform providers. For example, a virtual machine is called:

- EC2 instance (AWS)

- Compute Engine instance (Google Cloud)

- Virtual Machine (Azure)

- Droplet (DigitalOcean)

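Snippets from each cloud’s Terraform provider:

```hcl
resource "aws_instance" "web" {
  instance_type = "t3.micro"
  # ...other required arguments omitted for brevity
}

resource "azurerm_virtual_machine" "web" {
  location = "East US"
  vm_size  = "Standard_DS1_v2"
  # ...other required arguments omitted for brevity
}

resource "google_compute_instance" "web" {
  machine_type = "e2-medium"
  zone         = "us-east4-c" # a zone within the us-east4 region
  # ...other required arguments omitted for brevity
}

resource "digitalocean_droplet" "web" {
  region = "nyc2"
  size   = "s-1vcpu-1gb"
  # ...other required arguments omitted for brevity
}
```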

The same pattern repeats with other commodity services like VPNs, VPCs, and security groups. It even extends to higher-level building blocks such as a managed PostgreSQL service: RDS (AWS), Cloud SQL (Google Cloud), Azure Database for PostgreSQL server groups, Database clusters (DigitalOcean), and many more.

It’s almost as if calling something by its true name dispels the magic of the walled garden.

I bet you have already noticed that VM instance type names aren’t exactly intuitive even within a single cloud provider. But what about naming across vendors? t3.micro, Standard_DS1_v2, e2-medium, s-1vcpu-1gb. Take a look at this blog post to learn more.
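If you want a single vendor-neutral input anyway, you end up maintaining the translation table yourself. A minimal sketch, with a hypothetical cloud variable and a map of the four instance types from the snippets above. It needs no providers, so after terraform init, running terraform apply -var cloud=gcp would simply output e2-medium:

```hcl
variable "cloud" {
  type        = string
  description = "Target cloud: aws, azure, gcp or digitalocean"

  validation {
    condition     = contains(["aws", "azure", "gcp", "digitalocean"], var.cloud)
    error_message = "The cloud must be one of: aws, azure, gcp, digitalocean."
  }
}

locals {
  # Hand-maintained translation table. These four types are NOT equivalent
  # shapes (vCPU and memory differ); keeping them comparable is on you.
  vm_size_by_cloud = {
    aws          = "t3.micro"
    azure        = "Standard_DS1_v2"
    gcp          = "e2-medium"
    digitalocean = "s-1vcpu-1gb"
  }

  vm_size = local.vm_size_by_cloud[var.cloud]
}

output "vm_size" {
  value = local.vm_size
}
```

The map only fixes naming: each cloud still needs its own resource block with its own arguments, which is exactly the generalization gap described above.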

Learning the semantics of each cloud and writing complex IaC. Who has the time for that?

IaC and Terraform: Multi-cloud provider to the rescue

To address these problems, we created and published a Terraform provider that helps users create a single Kubernetes cluster spanning several clouds. Here is an example. All the details, like VPCs, security groups, VPNs, disks, and load balancers, are abstracted away from you. You can also easily deploy managed Kubernetes on your preferred single cloud provider and expand it to multi-cloud later, when you’re ready.

Also, a cluster created with CAST AI has self-healing capabilities. If, for instance, load balancers or K8s nodes get accidentally deleted from the cloud console by an eager junior DevOps engineer, our platform will bring them back up automatically without the need to rerun Terraform. Only the top-level castai_cluster resource is stored in the Terraform state file, meaning that all of its dependencies are maintained outside of Terraform.

You can also have your production CAST AI cluster together with other declarative configuration in the same IaC git repository.

If you would like to take IaC and Terraform to new heights, you could create a multi-cloud development cluster on every merge request, run your end-to-end application tests, and destroy the multi-cloud Kubernetes cluster in mere minutes. It’s not just an affordable development environment; it’s dishonorably inexpensive. That’s what we do 🙂

I challenge you to try the above with CAST AI. You can sign up here and will find our Terraform documentation here.

