
The 2022 Playbook of Cloud Cost Optimization for Kubernetes


The Pitfalls of Pay-Per-Use

As great as pay-per-use sounds, it doesn’t help you control your cloud costs.

It can actually get painful:

- During the holiday season in 2018, Pinterest incurred a bill of $20 million on top of the $170 million worth of already booked AWS cloud resources.

- Around the same time, Adobe accidentally racked up $80,000 a day in unplanned charges on Azure.

Optimizing cloud costs is a balancing act many companies struggle to master. To generate real savings, you need to find the sweet spot between cost and performance in real time, following the dynamic changes in application demands, market situation, and cloud vendor pricing.

You can’t treat cloud costs like other operational expenses. Especially if they make up a large chunk of your total cost of revenue.

Luckily, you no longer have to manually micromanage your cloud infrastructure to save on your cloud bill.

This guide takes you through all the modern approaches to cloud optimization, showing which technique brings the best results.

Why are Companies Struggling with Cloud Costs?

Jumping on the public cloud bandwagon too fast can make that wagon tip over easily.

Most teams find controlling cloud costs challenging because they have never had so much freedom to spin up new instances and experiment with different things. Even teams that have never used anything other than the public cloud struggle to control their cloud spend.

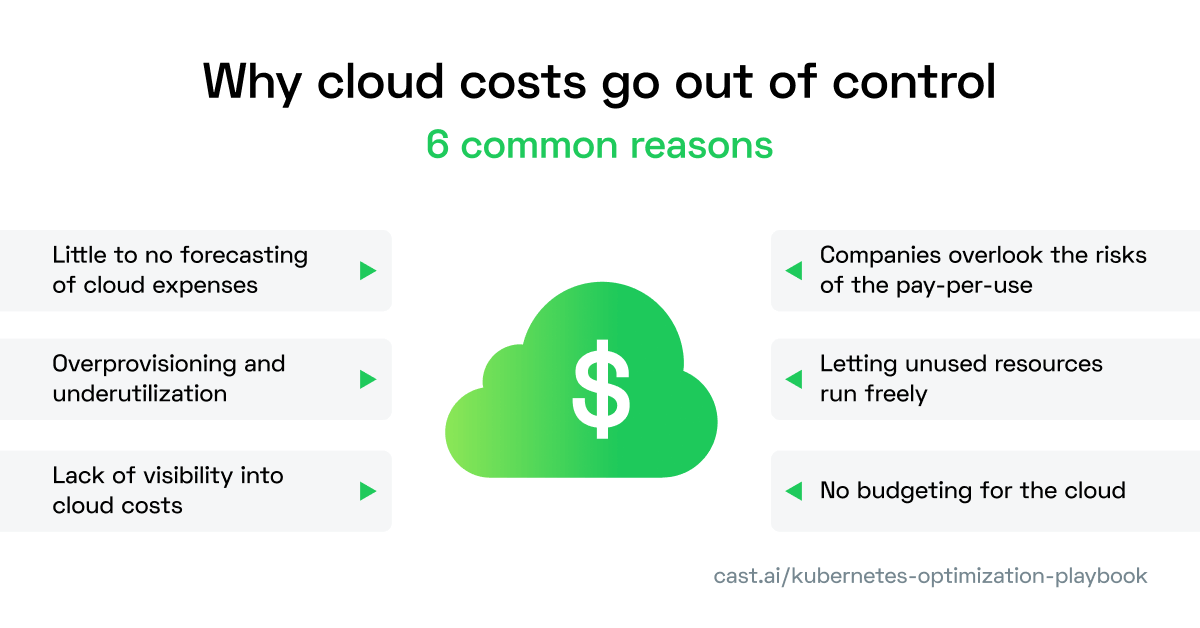

Here are some common reasons why cloud costs spiral out of control:

- Companies overlook the risks of pay-per-use,

- Lack of visibility into cloud costs,

- No budgeting for the cloud,

- Little to no forecasting of cloud expenses,

- Overprovisioning and underutilization,

- Letting unused resources run freely.

Legacy cost visibility, allocation, and management dashboards helped to solve some of these problems, but not all.

How do teams keep cloud costs from spiraling out of control today?

To gain control over their cloud expenses, companies apply various cost management and optimization solutions in tandem:

- Cost visibility and allocation – You can figure out where the expenses are coming from using a variety of cost allocation, monitoring, and reporting tools. Real-time cost monitoring is especially useful here since it instantly alerts you when you’re going over the set threshold.

A computing operation left running on Azure resulted in an unanticipated cloud charge of over $500k for one of Adobe’s teams. One alert could have prevented this.

- Cost budgeting and forecasting – You can estimate how many resources your teams will need and plan your budget if you have crunched enough historical data and have a fair idea of your future requirements.

This is particularly important for CFOs, who aren’t too pleased when they have to restate the quarterly results because someone left an expensive instance running for too long. Sounds simple? It’s anything but – Pinterest’s story mentioned in the introduction shows that really well.

- Legacy cost optimization solutions – This is where you combine all of the information you got in the first two points to create a complete picture of your cloud spend and discover potential candidates for improvement.

Many solutions on the market can assist with that, like Cloudability or VMware’s CloudHealth. But most of the time, all they give you is static recommendations for engineers to implement manually.

- Automated, cloud-native cost optimization – This is the most powerful solution for reducing cloud costs you can use. This type of optimization doesn’t require any extra work from teams and results in round-the-clock savings of 50% and more, even if you’ve been doing a great job optimizing manually.

A fully autonomous and automated solution that can react quickly to changes in resource demand or pricing is the best approach here.
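To make the threshold alerting from the first point more concrete, here is a minimal sketch in Python. The function name `check_budget`, the spend figures, and the 80% warning level are all hypothetical. A real setup would read spend from your provider’s billing data and send a notification rather than return a string.

```python
from typing import Optional


def check_budget(spend_to_date: float, monthly_budget: float,
                 warn_ratio: float = 0.8) -> Optional[str]:
    """Return an alert message when spend crosses a threshold, else None."""
    if monthly_budget <= 0:
        raise ValueError("monthly_budget must be positive")
    ratio = spend_to_date / monthly_budget
    if ratio >= 1.0:
        return f"OVER BUDGET: {ratio:.0%} of budget used"
    if ratio >= warn_ratio:
        return f"WARNING: {ratio:.0%} of budget used"
    return None


# Hypothetical figures, for illustration only.
print(check_budget(4_200.0, 5_000.0))  # WARNING: 84% of budget used
```

Running a check like this on every billing update, instead of once a month, is what turns a report into an alert.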

Manual vs. automated cloud cost optimization

Should we continue to burden engineers with all the management and optimization tasks?

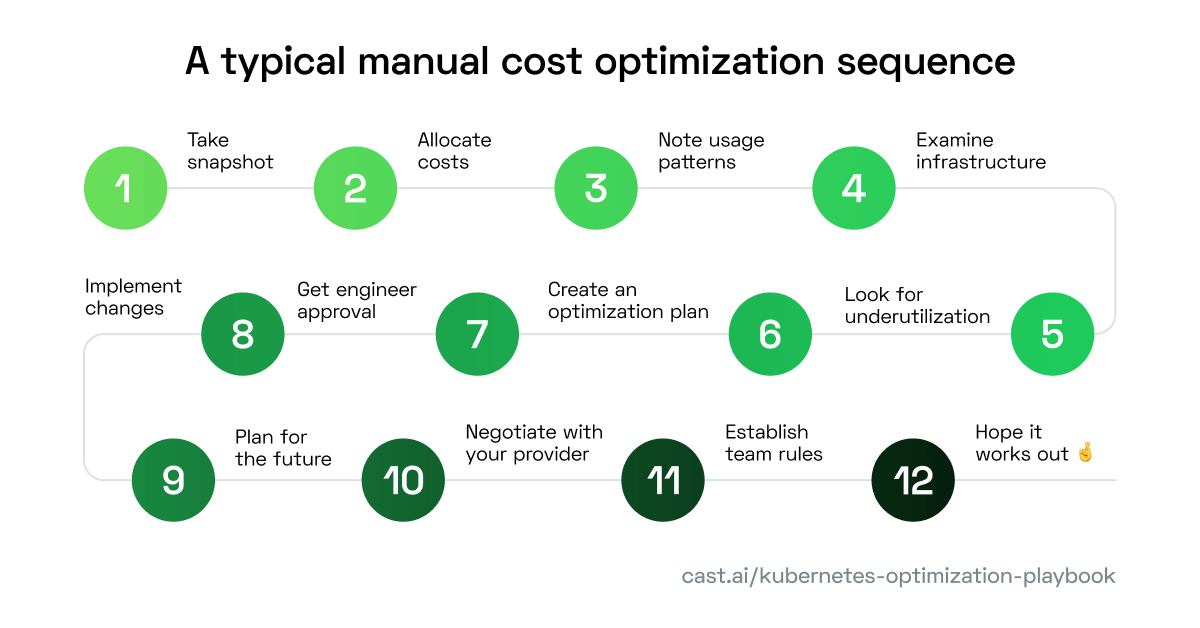

A typical manual cost optimization sequence looks like this:

- Take a snapshot of your cloud costs at a specific point in time.

- Allocate costs to teams or departments to understand where they come from.

- Identify usage and growth patterns to clarify which of the costs make sense and which ones are good candidates for optimization.

- Examine your infrastructure in-depth to check whether you could eliminate any of your costs (like abandoned projects, shadow IT projects, or unused instances that were left running).

- Examine the virtual machines and other resources used by your teams to check for overprovisioning or underutilization.

- Come up with an optimization plan and reach out to the engineering team for buy-in and confirmation.

- Also, do your best to convince engineers that costs are just as important as performance when it comes to cloud resources.

- Once everything is approved, implement the infrastructure changes.

- Now it’s time to think about the future. Analyze your requirements and start planning how you’re going to get extra capacity or remove resources you’ll no longer need.

- Take a look at your cloud provider’s offer to learn about their pricing, forecast your costs, reserve capacity upfront, or negotiate volume discounts with the vendor.

- Establish rules for teams to follow to use the discounted resources you bought to the fullest.

- And then hope that your cloud bill is no higher than you expected at the end of the month!

Allocating, understanding, analyzing, and forecasting cloud costs takes time. And your job isn’t done yet because you also need to apply infrastructure changes, research pricing plans, spin up new instances, and do many other things to build a cost-efficient infrastructure.
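As one example of what a single step in this sequence involves, the check for overprovisioning or underutilization can be sketched as a simple filter over utilization averages. This is only an illustration: the `VmUsage` type, the VM names, and the 20% CPU and 30% memory limits are assumptions, not values recommended by any provider.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class VmUsage:
    name: str
    avg_cpu_percent: float  # average CPU utilization over the review window
    avg_mem_percent: float  # average memory utilization over the same window


def find_underutilized(vms: List[VmUsage], cpu_limit: float = 20.0,
                       mem_limit: float = 30.0) -> List[str]:
    """Names of VMs whose CPU and memory averages both sit below the limits."""
    return [vm.name for vm in vms
            if vm.avg_cpu_percent < cpu_limit and vm.avg_mem_percent < mem_limit]


# Hypothetical fleet, for illustration only.
fleet = [
    VmUsage("api-1", 12.0, 25.0),
    VmUsage("db-1", 65.0, 80.0),
    VmUsage("batch-1", 5.0, 45.0),
]
print(find_underutilized(fleet))  # ['api-1']
```

The filter itself is trivial. The manual effort goes into collecting trustworthy utilization data and repeating the review as workloads change.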

Automation can solve this for you. Here’s how many tasks it takes off your plate:

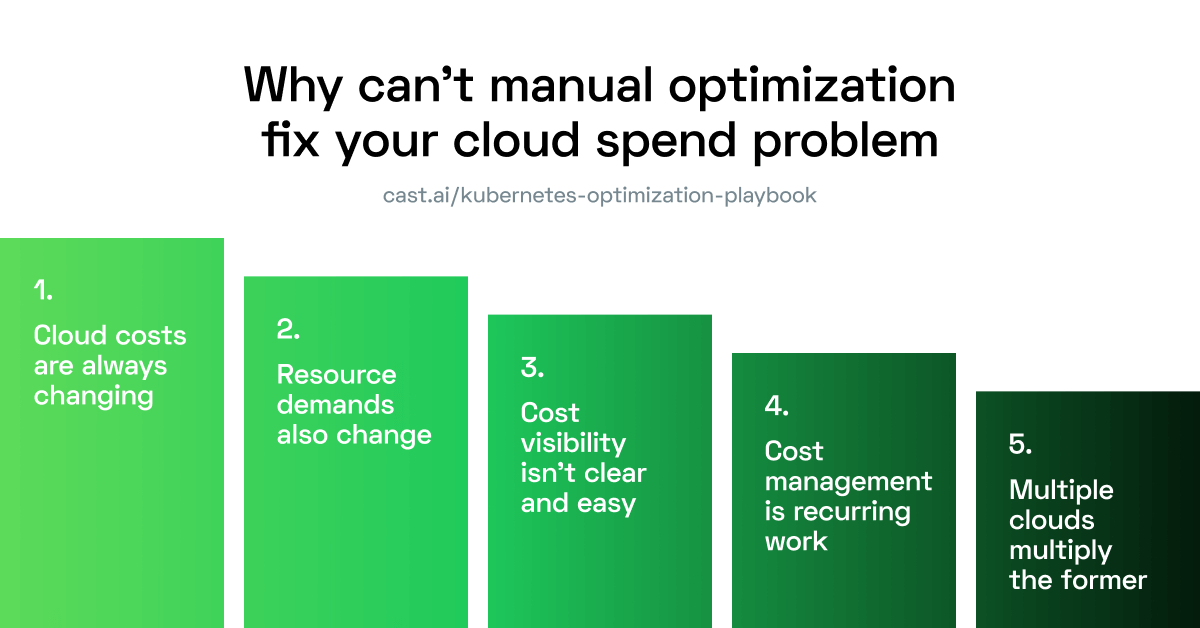

Here’s why manual optimization won’t fix your cloud spend problem

1. Cloud costs are always changing

Predicting cloud expenses is hard, even if you’re a tech giant like Pinterest. During the 2018 holiday season, the company’s cloud spend went way beyond the initial estimates due to increased usage. Pinterest had to pay AWS $20 million on top of the $170 million worth of cloud resources it already reserved.

2. Resource demands never stay the same either

Using the public cloud is all about striking the balance between cost and performance. Traffic spikes can either generate a massive and unforeseen cloud bill if you leave resource usage uncapped or cause your application to crash if you put rigid limits on its resources. Cloud cost management doesn’t get you anywhere near solving this issue.

3. Cost visibility is harder than it sounds

Decision-making about cloud spend is often decentralized in large organizations. This makes visibility more challenging than it seems. Add to that shadow IT projects popping up all over the place and you’ll have to deal with costs that can’t be explained just by taking a look at a dashboard or report.

4. Multi-cloud makes cost management even more challenging

Companies that use cloud services from multiple providers need to consider the costs of several different public cloud providers at the same time. It’s like doubling or tripling the effort you put into one cloud, and there are no shortcuts here.

5. Cloud cost management requires manual work

And lots of it. Analyzing your setup, allocating costs to teams, understanding how much you’ve spent on what, finding better options, migrating your applications to better resources, and then checking whether it’s all working – this is what you need to do. And not just once, but on a regular basis.

Why we need to automate cloud cost optimization

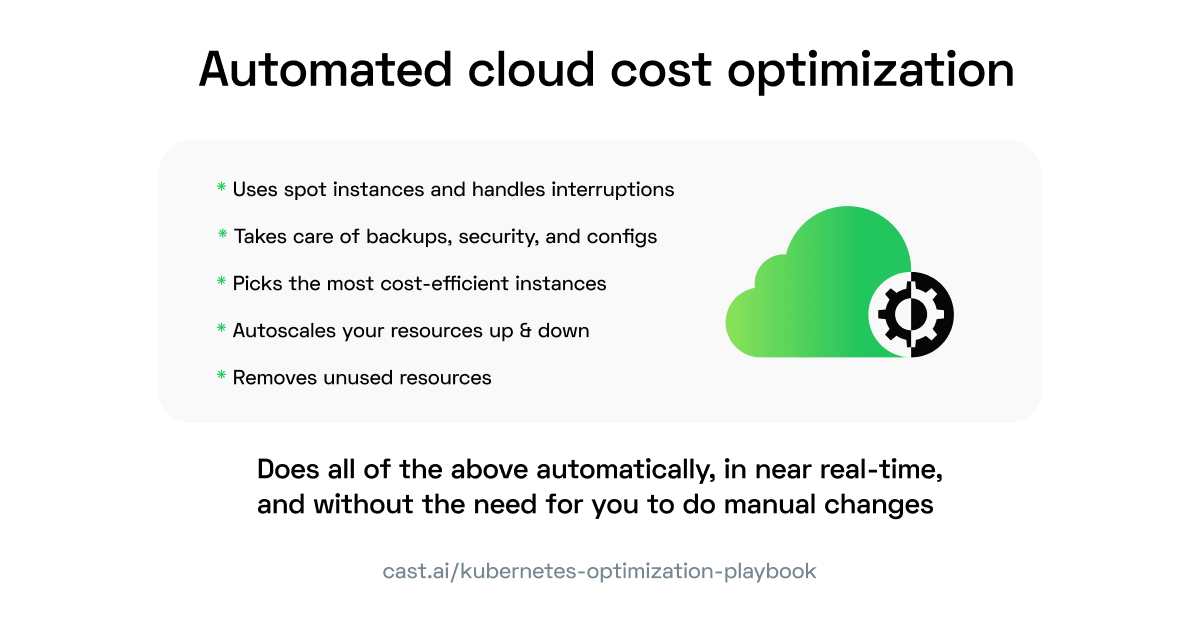

An automated solution:

- Selects the most cost-efficient instance types and sizes to match the requirements of your applications,

- Autoscales your cloud resources to handle spikes in demand,

- Removes resources that aren’t being used,

- Takes advantage of spot instances and handles potential interruptions gracefully,

- Does so much more to help you avoid expenses in other areas – it automates storage and backups, security and compliance management, and changes to configurations and settings.

- And most importantly – it applies all of these changes in real time, mastering the point-in-time nature of cloud optimization.

Not only does an automated solution achieve all of these things, but it does so without adding repetitive tasks for engineers. Some things just aren’t supposed to be managed manually.

Let’s look into some of the named cost optimization points to see why automation brings so much value there.

1. It makes cloud billing 10x easier

Start with the cloud bill and you’re bound to get lost

Take a look at a bill from a cloud vendor and we guarantee that it will be long, complicated, and hard to understand. Each service has a defined billing metric, so understanding your usage to the point where you can make confident predictions about it is just overwhelming.

Now try billing for multiple teams or clouds

If several teams or departments contribute to one bill, you need to know who is using which resources to make them accountable for these costs. And cost allocation is no small feat, especially for dynamic Kubernetes infrastructures. Now imagine doing it all manually for more than one cloud service!
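One common way to split a shared bill is in proportion to the resources each team requests. The sketch below shows that idea under simplifying assumptions: it looks only at CPU requests, ignores memory, storage, and idle capacity, and uses hypothetical team names and figures. The function `allocate_cost` is illustrative and does not come from any particular tool.

```python
from typing import Dict


def allocate_cost(total_cost: float,
                  cpu_requests: Dict[str, float]) -> Dict[str, float]:
    """Split total_cost across teams in proportion to their CPU requests."""
    total_cpu = sum(cpu_requests.values())
    if total_cpu <= 0:
        raise ValueError("at least one team must request CPU")
    return {team: round(total_cost * cpu / total_cpu, 2)
            for team, cpu in cpu_requests.items()}


# Hypothetical cluster bill and CPU requests (in cores), for illustration only.
shares = allocate_cost(1000.0, {"checkout": 6.0, "search": 3.0, "batch": 1.0})
print(shares)  # {'checkout': 600.0, 'search': 300.0, 'batch': 100.0}
```

In a dynamic Kubernetes cluster, the requests change as pods come and go, so the split has to be recomputed continuously rather than once per invoice.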

2. Forecasting is no longer based on guesswork

To estimate your future resource demands, you need to do a few things:

- Start by gaining visibility – analyze your usage reports to learn about patterns in spend,

- Identify peak resource usage scenarios – you can do that by using periodic analytics and running reports over your usage data,

- Consider other sources of data like seasonal customer demand patterns. Do they correlate with your peak resource usage? If so, you might have a chance of identifying them in advance,

- Monitor resource usage reports regularly and set up alerts,

- Measure application- or workload-specific costs to develop an application-level cost plan,

- Calculate the total cost of ownership of your cloud infrastructure,

- Analyze the pricing models of cloud providers and accurately plan capacity requirements over time,

- Aggregate all of this data in one location to understand your costs better.

Many of the tasks we listed above aren’t one-off jobs, but activities you need to engage in on a regular basis. Imagine how much time they take when carried out manually.
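To show what one of these recurring tasks looks like in practice, here is a minimal sketch of peak identification over daily spend data. It flags days whose spend sits more than one standard deviation above the mean. The threshold rule, the function name `find_peaks`, and the sample figures are all assumptions chosen for illustration. Production forecasting would account for trend and seasonality.

```python
from statistics import mean, pstdev
from typing import List


def find_peaks(daily_spend: List[float], sigmas: float = 1.0) -> List[int]:
    """Indexes of days whose spend exceeds mean + sigmas * standard deviation."""
    if not daily_spend:
        return []
    threshold = mean(daily_spend) + sigmas * pstdev(daily_spend)
    return [day for day, spend in enumerate(daily_spend) if spend > threshold]


# Hypothetical week of daily spend in dollars, for illustration only.
week = [100.0, 110.0, 105.0, 95.0, 300.0, 100.0, 90.0]
print(find_peaks(week))  # [4]
```

Once peaks are flagged, you can compare their dates against seasonal demand data to see whether they could have been anticipated.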

3. You avoid falling into the reservation trap

Reserving capacity for one or three years in advance at a much cheaper rate seems like an interesting option. Why not buy capacity in advance when you know that you’ll be using the service anyway?

But like anything else in the world of the cloud, this only seems easy.

You already know that forecasting cloud costs is hard. Even companies that have entire teams dedicated to cloud cost optimization miss the mark here.

How are you supposed to plan ahead for capacity when you have no clue how much your teams will require in one or three years? This is the main issue with products like reserved instances and savings plans.

Here are a few things you should know about reserving capacity:

- A reserved instance works on a “use it or lose it” basis – every hour that it sits idle is an hour lost to your team (along with any financial benefits you might have secured).

- When you commit to specific resources or levels of consumption, you assume that your needs won’t change throughout the contract’s duration. But even one year of commitment is an eternity in the cloud. And when your requirements go beyond what you reserved, you’ll have to pay the price – just like Pinterest did.

- When confronted with a new issue, your team may be forced to commit to even more resources. Or you’ll find yourself with underutilized capacity that you’ve already paid for. In both scenarios, you’re on the losing end of the game.

- By entering into this type of contract with a cloud service provider, you risk vendor lock-in – i.e. becoming dependent on that provider (and whatever changes they introduce) for the next year or three.

- Selecting optimal resources for reservation is complex (just look at the forecasting steps listed in point 2 above).
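The “use it or lose it” trade-off comes down to simple arithmetic. A reservation pays off only if you use the capacity for a larger share of the term than the ratio of the reserved rate to the on-demand rate. The sketch below works through that calculation. The hourly rates are hypothetical and not taken from any provider’s price list, and the model ignores upfront payment options.

```python
def break_even_utilization(on_demand_hourly: float,
                           reserved_hourly: float) -> float:
    """Fraction of the term you must use the capacity for a reservation to pay off."""
    return reserved_hourly / on_demand_hourly


def reservation_savings(on_demand_hourly: float, reserved_hourly: float,
                        hours_in_term: float, utilization: float) -> float:
    """Positive when reserving is cheaper than on-demand at this utilization."""
    on_demand_cost = on_demand_hourly * hours_in_term * utilization
    reserved_cost = reserved_hourly * hours_in_term  # paid whether used or not
    return on_demand_cost - reserved_cost


# Hypothetical rates: $0.10/h on-demand, $0.06/h reserved, one-year term.
HOURS_PER_YEAR = 8760
print(break_even_utilization(0.10, 0.06))
print(reservation_savings(0.10, 0.06, HOURS_PER_YEAR, utilization=0.5))
print(reservation_savings(0.10, 0.06, HOURS_PER_YEAR, utilization=0.9))
```

With these assumed rates, the break-even point is about 60% utilization. At 50% the reservation loses money, and at 90% it saves money. The hard part is knowing a year or three in advance which side of the line you will land on.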

4. You can rightsize virtual machines in real time

Selecting the right virtual machine size can drive your bill down by a lot if compute is your biggest expense.

But how can you expect a human engineer to do that when AWS alone has some 400 different EC2 instance types that come in many sizes?

Similar instance types deliver different performance levels depending on which provider you pick. Even in the same cloud, a more expensive instance doesn’t always come with higher performance.

Here’s what you usually need to do when picking an instance manually:

- Establish your minimal requirements

Make sure you do it for all compute dimensions, including CPU (architecture, count, and processor choice), memory, SSD, and network connection.

- Choose the right instance type

You may select from a variety of CPU, memory, storage, and networking configurations that are bundled in instance types that are optimized for a certain capability.

- Define your instance’s size

Remember that the instance should have adequate capacity to handle your workload’s requirements and, if necessary, incorporate features such as bursting.

- Examine various pricing models

On-demand (pay-as-you-go), reserved capacity, spot instances, and dedicated hosts are all available from the three major cloud providers. Each of these alternatives has its own set of pros and cons.

Considering that you need to do that on a regular basis, that’s a lot of work!

When you automate the above, the way it works might surprise you in a good way.

We were running our application on a mix of AWS On-Demand instances and spot instances. We used CAST AI to analyze our setup and look for the most cost-effective spot instance alternatives. We needed a machine with 8 CPUs and 16 GB of memory.

The platform ran our workload on an instance from the Inf1 family, which comes with AWS Inferentia chips, accelerators built for machine learning inference. It’s a specialized machine that is usually quite expensive.

That must have driven the cost up! But it all became clear after we checked the current pricing. It turned out that at that time, INF1 just happened to be cheaper than the usual general-purpose compute we used.

9 days out of 10, it’s not an instance type that’s worth checking if you’re looking for cost savings. An engineer wouldn’t do it or select INF1 as an option when using a manual tool that gives you a preset list of instance types.

But when it’s the AI working, it makes sense to check for these types every single time – that’s how you can get your hands on the best price.
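The idea behind this story can be sketched in a few lines: filter every available offer by the minimum requirements, then pick the cheapest one at the current price, without excluding any family up front. This is a simplified illustration and not CAST AI’s actual algorithm. The `Offer` type, the offer names, and the prices are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Offer:
    name: str
    vcpus: int
    memory_gib: float
    hourly_price: float  # current price, which may change from hour to hour


def cheapest_fit(offers: List[Offer], min_vcpus: int,
                 min_memory_gib: float) -> Optional[Offer]:
    """Cheapest offer that meets the minimum CPU and memory, or None."""
    candidates = [o for o in offers
                  if o.vcpus >= min_vcpus and o.memory_gib >= min_memory_gib]
    return min(candidates, key=lambda o: o.hourly_price, default=None)


# Hypothetical catalog snapshot, for illustration only.
catalog = [
    Offer("general-purpose-8", 8, 16.0, 0.20),
    Offer("ml-accelerated-8", 8, 16.0, 0.15),  # specialized, but cheaper right now
    Offer("small-4", 4, 8.0, 0.05),            # too small for the workload
]
best = cheapest_fit(catalog, min_vcpus=8, min_memory_gib=16.0)
print(best.name if best else "no fit")  # ml-accelerated-8
```

The value comes from running this against the full catalog every time prices change, which is tedious for a person and cheap for software.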

5. Automation scales resources instantly

If you’re running an e-commerce application, you need to prepare for sudden traffic spikes (think getting mentioned by a Kardashian on Instagram) yet scale things down when the need is gone.

Manually scaling your cloud capacity is difficult and time-consuming. You must keep track of everything that happens in the system, which may leave you with little time to explore cloud cost reductions.

When demand is low, you run the risk of overpaying. And when demand is high, you’ll offer poor service to your customers.

Here’s what you need to take care of when scaling resources manually:

- Gracefully handle traffic increases and keep costs at bay when the need for resources drops,

- Ensure that changes applied to one workload don’t cause any problems in other workloads or teams,

- Configure and manage resource groups on your own, making sure that they all contain resources suitable for your workloads.

When scaling manually, you’d have to scale your resources up or down for each and every virtual machine across every cloud service you use. This is next to impossible. And you have better things to do anyway.

That’s where autoscaling comes into play.

Autoscaling does all the tasks listed above automatically. All you need to do is define your policies related to horizontal and vertical autoscaling, and the autonomous optimization tool will do the job for you.
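As an example of a horizontal autoscaling policy, the Kubernetes Horizontal Pod Autoscaler documents its core rule as: desired replicas = ceil(current replicas × current metric / target metric). The sketch below applies that rule and clamps the result to a minimum and maximum. It leaves out the tolerance band and stabilization windows that the real autoscaler applies, and the function name and default bounds are assumptions.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float,
                     target_metric: float, min_replicas: int = 1,
                     max_replicas: int = 10) -> int:
    """Replica count that would bring the per-replica metric back to target."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    raw = math.ceil(current_replicas * current_metric / target_metric)
    # Keep the result within the bounds set by the scaling policy.
    return max(min_replicas, min(max_replicas, raw))


# 4 replicas at 90% average CPU with a 60% target: scale up.
print(desired_replicas(4, 90.0, 60.0))  # 6
# 4 replicas at 20% average CPU with a 60% target: scale down.
print(desired_replicas(4, 20.0, 60.0))  # 2
```

The policy you define is essentially the target metric and the bounds. The autoscaler then re-evaluates the rule continuously.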

6. It handles spot instances for greater cost savings

Spot instances are up to 90% cheaper than on-demand instances, so buying idle capacity from cloud providers makes sense.

There’s a catch, though: the provider may reclaim these resources at any time. If you’re an AI-driven SaaS, this is fine while you’re doing some background data crunching that you can delay.

But what if you need the workload to avoid the interruption? You need to make sure your application is ready for that and have a plan in place when your spot instance is interrupted.

Here’s how you can take advantage of spot instances:

- Check to see if your workload is ready for a spot instance

Will you be able to tolerate interruptions? How long will it take to finish the project? Is this a life-or-death situation? These and other questions are useful in determining if a workload is suitable for spot instances.

- Examine your cloud provider offer

Examining less popular instances is a good idea because they’re less likely to be interrupted and can run for longer periods of time. Before deciding on an instance, look at how often it is interrupted.

- Make a bid

Set the maximum price you’re willing to spend for your preferred spot instance. The rule of thumb is to set the maximum price at the level of on-demand pricing.

- Manage spot instances in groups

You’ll be able to request a variety of instance types at the same time, boosting your chances of securing a spot instance.

To make all of the above work manually, you would have to dedicate a lot of time and effort to ongoing configuration, setup, and maintenance tasks. Automation takes all the repetitive tasks off your plate.
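The selection steps above can be sketched as a filter and sort: drop offers that are interrupted too often, cap the price at the on-demand level, and request several of the cheapest remaining types as a group. This is a minimal sketch under stated assumptions. The `SpotOffer` type, the instance type names, the prices, and the 10% interruption limit are hypothetical, and real interruption data would come from your cloud provider.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class SpotOffer:
    instance_type: str
    spot_price: float         # current hourly spot price
    on_demand_price: float    # hourly on-demand price for the same type
    interruption_rate: float  # observed share of instances reclaimed, 0.0 to 1.0


def pick_spot_group(offers: List[SpotOffer],
                    max_interruption_rate: float = 0.10,
                    group_size: int = 3) -> List[str]:
    """Cheapest instance types that are rarely interrupted and within the price cap."""
    eligible = [o for o in offers
                if o.interruption_rate <= max_interruption_rate
                and o.spot_price <= o.on_demand_price]  # cap at on-demand price
    eligible = sorted(eligible, key=lambda o: o.spot_price)
    return [o.instance_type for o in eligible[:group_size]]


# Hypothetical market snapshot, for illustration only.
market = [
    SpotOffer("type-a", 0.03, 0.10, 0.05),
    SpotOffer("type-b", 0.02, 0.10, 0.25),  # cheap but interrupted too often
    SpotOffer("type-c", 0.04, 0.10, 0.08),
    SpotOffer("type-d", 0.12, 0.10, 0.02),  # spot price above on-demand
]
print(pick_spot_group(market, group_size=2))  # ['type-a', 'type-c']
```

Requesting a group rather than a single type raises the odds that at least one request is fulfilled, at the cost of having to handle workloads that land on different machine shapes.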

Automated cloud cost optimization in action

For companies in sectors like AdTech, reducing the cloud bill can have a massive impact on their bottom line. A provider of enterprise-grade marketing solutions, Branch, invested in automated cloud cost optimization and saw savings at the multi-million level.

“With CAST AI, our applications are using a more efficient combination of cloud services, and we have already reduced our annualized cloud costs by millions of dollars,” said Mark Weiler, SVP Engineering at Branch.

Startups benefit from optimizing cloud costs during periods of rapid growth, when scaling cloud resources becomes very challenging. One social media or TV mention can bring them thousands or even millions of potential customers.

As explained by the CTO of a French e-commerce startup La Fourche, Martin Le Guillou:

“Startups like us need to have the ability to scale really fast when we get covered by the media and suddenly lots of people start coming our way. We tried a few solutions, but none supported us properly because they couldn’t autoscale using the best nodes matching our needs. CAST AI is a good product because it offers features that aren’t available in most node autoscalers on the market. I think its autoscaling capabilities can make a real difference to an e-commerce company.”

Naturally, smarter scaling leads to cost savings. La Fourche saw its bill get lower the month after implementing automated cloud resource scaling:

“Getting to those savings was super simple. First, we installed the CAST AI agent that went over our setup and gave us recommendations. […] We implemented the suggestions manually and waited for results. Next month, our bill was $3k lower. And this is just the beginning; we haven’t even started to work on spot instances and node evictions yet – and we anticipate even more savings from them.” – Martin Le Guillou, CTO at La Fourche

Ultimately, automation helps teams to handle public cloud infrastructure, especially as their demands grow over time and lead to greater infrastructure complexity. The media advertising platform Boostr realized that stepping in with an automation solution at the right time prevents cloud management from eating up too many engineering resources:

“CAST AI is a great solution for anyone that has a fairly sprawling infrastructure that is growing a little out of control and becoming difficult to manage. Turning to containers and running applications in a much more consistent fashion – without ever forgetting about the costs – is a smart move. Looking at the expertise of CAST AI in Kubernetes, we are confident that these cost savings are going to be realized.” – Ryan Upton, Architect at Boostr

Want to achieve results like these? Book a demo call with CAST AI now.
