Artificial Intelligence Cost Optimization: A 76% Reduction in ML Model Training Price

The proof of concept (POC) carried out by the CAST AI team showed that the platform can cut cloud costs by 76%, inclusive of platform fees, while keeping run times and output quality comparable to the customer’s existing production system.

Company size

25k+ employees

Industry

Pharmaceutical

Cloud services used

Amazon Elastic Kubernetes Service (EKS)

A leader in the pharmaceutical industry approached us with the goal of reducing the cloud costs of running scientific machine learning (ML) models at scale. The company used ML models to run patient simulations as part of its R&D activities, with thousands of pods scaling to 10k CPUs to process millions of patient records per batch run.

Problem: Manually handled EC2 spot instances failed to generate the expected cost savings

The customer’s system relied on engineers deciding which machines to use for model executions and jobs.

To optimize cloud expenses, the company used EC2 spot instances for selected jobs. However, the team had no information about future pricing trends.

The ratio of EC2 spot instances to on-demand instances was chosen manually, often by trial and error. The goal was to achieve the highest possible spot fulfillment rate at any given moment. Any failure to obtain EC2 spot instances was also handled manually.

Because selecting and launching EC2 spot instances was a manual process, system users couldn’t schedule jobs to run in the future, for example over the weekend. The team ran workloads immediately, on request, rather than letting them run whenever the price was lowest. If there was no spot capacity left, the job couldn’t be restarted. All of this meant that jobs could only be run in the present moment, and errors could only be addressed as they occurred.

Solution: Automating EC2 spot instances at every lifecycle stage

The customer decided to start a proof of concept with CAST AI to check whether the platform could address these issues.

Identifying optimal pod configuration

CAST AI identifies the optimal pod configuration for computation requirements and automatically picks virtual machines that meet workload criteria while selecting the cheapest instance families on the market.
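The selection step described above can be pictured as a filter followed by a minimum. The snippet below is only a minimal sketch under stated assumptions: the `InstanceOffer` type, the `cheapest_fit` helper, the instance names, and the prices are all hypothetical and do not reflect CAST AI's actual implementation or real AWS pricing.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class InstanceOffer:
    name: str            # hypothetical instance type label, not a real AWS name
    vcpus: int
    memory_gib: float
    hourly_price: float  # illustrative spot price in USD, not real market data


def cheapest_fit(offers, cpu_request, memory_request_gib):
    """Return the lowest-priced offer that satisfies both requests, or None."""
    candidates = [
        offer for offer in offers
        if offer.vcpus >= cpu_request and offer.memory_gib >= memory_request_gib
    ]
    return min(candidates, key=lambda offer: offer.hourly_price, default=None)


# Made-up offers across several instance families.
offers = [
    InstanceOffer("family-a.large", 2, 8.0, 0.035),
    InstanceOffer("family-b.xlarge", 4, 16.0, 0.061),
    InstanceOffer("family-c.xlarge", 4, 32.0, 0.058),
    InstanceOffer("family-d.2xlarge", 8, 32.0, 0.120),
]

choice = cheapest_fit(offers, cpu_request=4, memory_request_gib=16.0)
print(choice.name)
```

In this made-up data the larger-memory `family-c.xlarge` offer wins because it is cheaper than the exact-fit `family-b.xlarge`, which is the kind of cross-family comparison that is hard to do by hand.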

If the platform encounters an “Out of Capacity” error, the unavailable instances are blacklisted for a short period and retried later. To balance workloads dynamically, CAST AI spreads them across multiple Availability Zones.
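One way to picture the short-lived blacklist is a map from an instance pool to the time at which it may be tried again. This is a rough sketch, not CAST AI's code: the `CapacityBlacklist` class, the `pick_pool` helper, the five-minute cooldown, and the pool names are assumptions made for illustration. Time is passed in explicitly so the behavior is easy to follow.

```python
class CapacityBlacklist:
    """Tracks pools that recently returned an out-of-capacity error."""

    def __init__(self, cooldown_seconds=300.0):  # cooldown length is an assumption
        self.cooldown_seconds = cooldown_seconds
        self._blocked_until = {}  # (instance_type, zone) -> time when retry is allowed

    def mark_out_of_capacity(self, instance_type, zone, now):
        self._blocked_until[(instance_type, zone)] = now + self.cooldown_seconds

    def is_available(self, instance_type, zone, now):
        return now >= self._blocked_until.get((instance_type, zone), 0.0)


def pick_pool(pools, blacklist, now):
    """Return the first (instance_type, zone) pair that is not blacklisted, or None."""
    for instance_type, zone in pools:
        if blacklist.is_available(instance_type, zone, now):
            return instance_type, zone
    return None


# Hypothetical pools, ordered by preference, spanning two zones.
pools = [("type-a", "zone-1"), ("type-a", "zone-2"), ("type-b", "zone-1")]
blacklist = CapacityBlacklist(cooldown_seconds=300.0)
blacklist.mark_out_of_capacity("type-a", "zone-1", now=0.0)

first = pick_pool(pools, blacklist, now=10.0)   # preferred pool is still cooling down
later = pick_pool(pools, blacklist, now=400.0)  # cooldown has expired, pool is retried
print(first, later)
```

Keying the blacklist on the pair of instance type and zone means an out-of-capacity error in one zone does not rule out the same instance type elsewhere.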

Keeping workloads afloat even when no EC2 spot instances are available

If the platform cannot find any spot capacity to meet a workload’s demand, its Spot Fallback feature temporarily runs the workload on on-demand instances until spot capacity becomes available. Once that happens, CAST AI seamlessly moves workloads back to EC2 spot instances, provided that users have requested this behavior.
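The fallback behavior can be read as a small decision rule evaluated whenever spot availability changes. The sketch below is an illustration under assumptions: `next_lifecycle`, the `move_back_to_spot` flag, and the availability timeline are hypothetical names and data, not the platform's API.

```python
def next_lifecycle(spot_available, current, move_back_to_spot):
    """Decide where the workload should run next.

    current is "spot", "on-demand", or None for a workload that is not yet placed.
    """
    if current == "on-demand":
        # Already on fallback: return to spot only if capacity is back
        # and the user opted in to being moved.
        return "spot" if (spot_available and move_back_to_spot) else "on-demand"
    # New workload, or one currently on spot: prefer spot, else fall back.
    return "spot" if spot_available else "on-demand"


def simulate(availability, move_back_to_spot):
    """Replay a timeline of spot availability and record each placement."""
    state, history = None, []
    for spot_available in availability:
        state = next_lifecycle(spot_available, state, move_back_to_spot)
        history.append(state)
    return history


# Hypothetical timeline: no spot capacity for two checks, then capacity returns.
timeline = [False, False, True, True]
with_move_back = simulate(timeline, move_back_to_spot=True)
without_move_back = simulate(timeline, move_back_to_spot=False)
print(with_move_back)
print(without_move_back)
```

With the flag enabled the workload returns to spot as soon as capacity reappears; with it disabled the workload stays on on-demand instances, matching the opt-in behavior described above.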

Pricing prediction for smarter workload execution planning

CAST AI’s pricing models, built on a state-of-the-art transformer, predict seasonality and trends in prices, allowing the platform to select the best times to run a batch workload. This leads to significant cost savings when users don’t require the workloads to execute immediately.
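Once a price forecast exists, choosing when to run reduces to finding the cheapest window before a deadline. The following sketch assumes the forecast is already available as a list of hourly prices. It does not model the transformer itself, and `best_start_hour`, its parameters, and the numbers are hypothetical.

```python
def best_start_hour(forecast_prices, run_hours, latest_start):
    """Return (start_index, total_cost) of the cheapest contiguous window.

    forecast_prices: predicted price per hour, index 0 being "now".
    run_hours: how many consecutive hours the batch job needs.
    latest_start: last hour index at which the job is still allowed to start.
    Returns None if no window of the required length fits in the forecast.
    """
    best = None
    last = min(latest_start, len(forecast_prices) - run_hours)
    for start in range(last + 1):
        cost = sum(forecast_prices[start:start + run_hours])
        if best is None or cost < best[1]:  # strict "<" keeps the earliest of equal windows
            best = (start, cost)
    return best


# Made-up hourly forecast in USD for a three-hour job that may start within five hours.
forecast = [10, 10, 9, 8, 8, 9, 10, 11]
run_now = best_start_hour(forecast, run_hours=3, latest_start=0)
run_flexible = best_start_hour(forecast, run_hours=3, latest_start=5)
print(run_now, run_flexible)
```

Setting `latest_start=0` models a job that must run immediately, so the gap between the two results is the saving gained from being flexible about the start time.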

All of these built-in advantages allow CAST AI to schedule cloud resources more effectively, which ultimately leads to a much higher spot instance fulfillment rate and better savings.

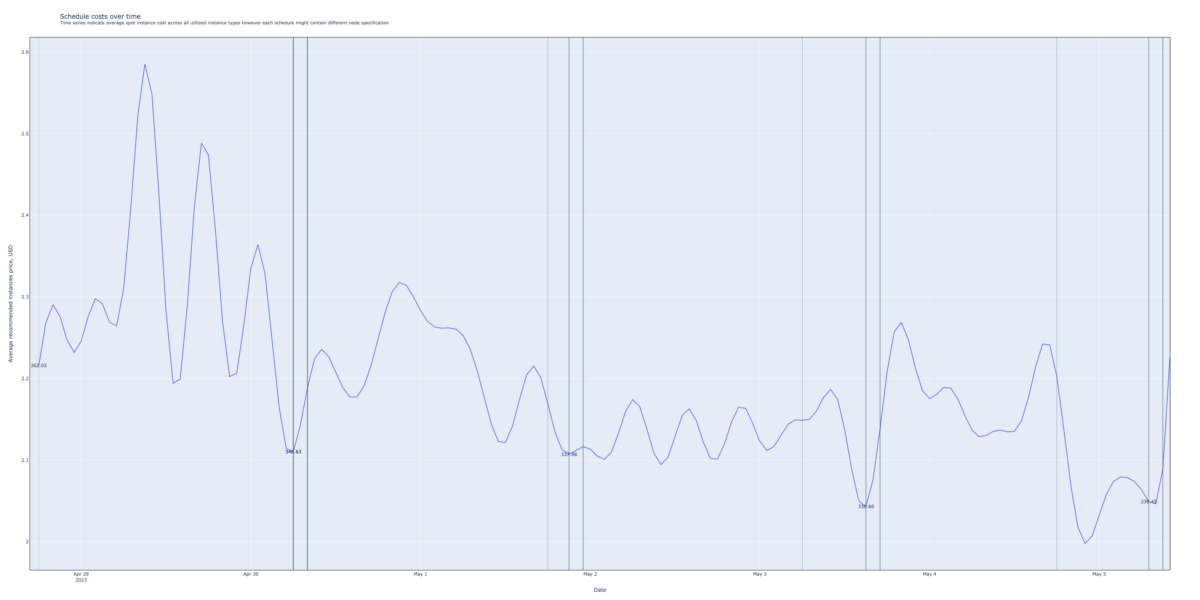

This simplified chart shows the price of running a single workload on two different types of EC2 instances. The when-to-run model calculates a price and an optimal pod configuration from inputs such as CPU and RAM requests, the predicted future price, and others.

The X axis shows the date and the Y axis shows the total price of a single workload run. If you ran the workload immediately, you’d pay about $360 for the whole run. If you waited a couple of days, the same run would cost you 5% less.

Result: Machine learning cost savings of 76%

The POC showed that the platform can generate cloud cost savings of 76%, inclusive of platform fees. It achieved these savings with run times similar to those of the customer’s existing production system and with consistently high-quality output.


CAST AI features used

- Spot instance automation

- Real-time autoscaling

- Instant Rebalancing

- Full cost visibility